The Risk Riddle: Why False Precision Breaks Risk Management

Two risks with identical $10M annualized loss leave leadership frozen like Buridan’s Ass, unable to choose between options that look mathematically equal. The paralysis disappears once you move beyond point estimates and acknowledge uncertainty, tail risk, and control, revealing that the risks were never truly the same.

The leadership team is deadlocked. Two risks, both representing $10M in annual expected loss, yet they can't agree on priorities. It's a modern version of Buridan's Ass: the philosophical paradox where a donkey starves between two equally appealing piles of hay, unable to choose.

A donkey, paralyzed between equal choices, dies from indecision; a paradox posed by philosopher Jean Buridan.

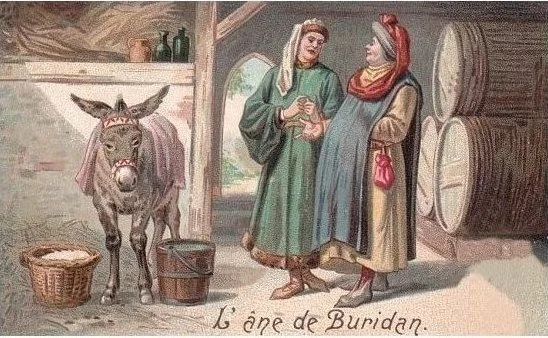

But here's the twist: these risks aren't actually identical. When we break it down a little further, we see:

Risk A: Data breach with 10% annual probability and $100M impact

Risk B: Regulatory shutdown with 1% annual probability and $1B impact

The math is identical. Both represent $10M in annualized risk. Yet watch what happens when you ask different stakeholders which risk keeps them up at night.

The Predictable Split

The CEO immediately points to the regulatory risk: "That's an existential threat to our business. We need a crisis management plan."

The CISO dismisses it entirely: "The data breach is the clear and present danger. That's where we need to focus our resources."

The Chief Risk Officer sighs: "Same math, totally different priorities. This is exactly why our risk discussions go in circles."

Same mathematical risk. Completely different reactions. Welcome to the Risk Riddle.

The False Precision Trap

Here's the problem: those point estimates are misleading. When we say "10% probability" and "$100M impact," we're creating an illusion of precision that doesn't exist in the real world.

The CEO's gut reaction isn't irrational; it's responding to uncertainty that our models hide. That "1% regulatory shutdown probability" could realistically range from 0.1% to 5%, depending on regulatory climate, pending investigations, and compliance posture. The impact could span $500M to $2B depending on timing, customer contracts, and recovery options. For existential risks, those uncertainty bounds matter enormously.

Similarly, the CISO knows that "10% data breach probability" is meaningless without context. Is that with current security controls, or after the planned infrastructure upgrade next month? During peak attack season? The range might actually be 3% to 25%, and crucially, it's something they can influence through action. The impact varies just as wildly; the incident response team could catch it mid-exfiltration and limit the blast radius, or they might not detect it for months while the FTC decides to throw the book at the company and make an example.

When Averages Lie

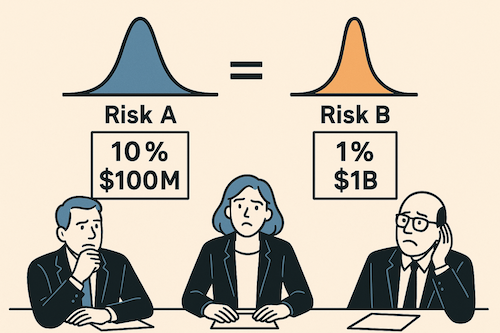

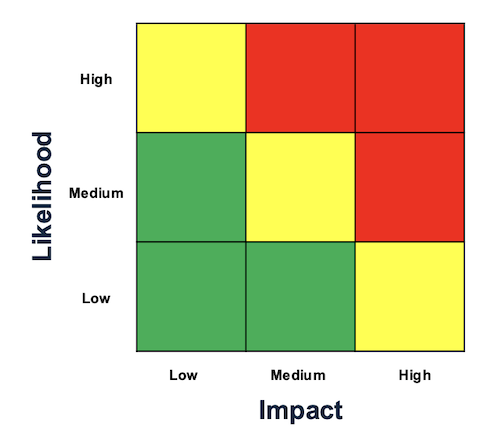

Red-yellow-green matrices collapse complex, multidimensional uncertainties into deceptively simple outputs

Traditional risk assessment, whether through red-yellow-green matrices or precise probability calculations, collapses complex, multidimensional uncertainties into deceptively simple outputs. Risk matrices reduce everything to "High-Medium-Low," while quantitative models that produce point estimates create an illusion of precision.

First, it misaligns stakeholder responses. The CEO sees "1% regulatory risk" and thinks "catastrophic tail risk with massive uncertainty." The CFO sees the same number and thinks "acceptable probability, let's buy insurance."

Second, it obscures actionability. A "10%" data breach risk means nothing without understanding the uncertainty range and what drives it. Are we 95% confident it's between 8-12%? Or could it realistically be anywhere from 2-30%?

Third, it prevents meaningful risk conversations. When you present point estimates, discussions devolve into debating whether the probability is "really" 10% or 12%. The more important strategic questions - risk appetite, response options, acceptable uncertainty levels - get lost.

The Distribution Solution

The answer isn't abandoning risk analysis. It's embracing the uncertainty that already exists.

Instead of "10% probability, $100M impact," express it as ranges:

Data breach: "We're looking at about 10% probability as our best estimate, though it could realistically range from 5% to 15%. Impact-wise, we're modeling around $100M typically, but we could see anything from $50M to $200M depending on how bad it gets."

Regulatory shutdown: "This one's much less likely - we're thinking around 1% probability, but the uncertainty is huge, anywhere from 0.1% to 3%. The scary part is when it hits, we're probably looking at $1B in losses, potentially ranging from $500M to $2B depending on scope and timing."

All of a sudden, we're having much more interesting conversations about where to apply our resources: what to cover with insurance, where to buy down risk, how much capital we need to make sure we have to cover these events, where we need to invest in mitigation versus resiliency. We've upgraded our conversations from arguing about ALEs to something much richer.

The data breach has higher likelihood of crossing our pain threshold, but the regulatory shutdown has catastrophic tail risk that could dwarf our loss assumptions entirely.

Probabilistic Thinking in Practice

When you model risks as distributions rather than point estimates, several things happen:

Risk conversations improve. Instead of arguing about whether something is 8% or 12% likely, you're discussing acceptable uncertainty levels and response strategies across probability ranges.

Decision-making becomes more robust. You can stress-test strategies against the full range of possibilities rather than optimizing for a single scenario.

Stakeholder alignment emerges naturally. The CEO's focus on existential threats and the CISO's emphasis on controllable risks both become rational responses to properly expressed uncertainty.

Beyond the Riddle

The Risk Riddle reveals something fundamental about how humans process uncertainty. We don't think in point estimates—we think in ranges, possibilities, and scenarios. When our risk models don't match how we naturally reason about uncertainty, the models become obstacles rather than tools.

The solution isn't more sophisticated mathematics. It's honest acknowledgment of what we don't know and modeling that reflects reality's inherent uncertainty.

Moving from point estimates to uncertainty ranges requires fundamentally rethinking your risk process - how you collect data, build models, and structure conversations. It's not a quick fix, but organizations that make this shift find their risk discussions become genuinely strategic rather than debates over decimal places. The uncertainty was always there; now you're just being honest about it.

False precision doesn't just break risk models. It breaks the conversations that matter most.

“That Feels Too High”: A Risk Analyst's Survival Guide

When stakeholders say your quantitative risk numbers don't "feel right," there are three main reasons: you missed something they know, cognitive bias is affecting their judgment, or you failed to communicate the numbers clearly. The key is to listen first and diagnose which reason applies, because their discomfort often contains the most valuable feedback for improving your risk analysis.

Photo by Danielle Cerullo on Unsplash

Years back, I walked into a meeting with our Chief Risk Officer, ready to present a cloud outage risk assessment I was genuinely proud of. I'd spent three weeks on it: interviewing subject matter experts, validating assumptions with comprehensive data, running scenarios, testing edge cases, and putting together a deck showing potential losses and the probability of exceeding various thresholds. Real data, not the "trust me bro" variety.

I finished presenting and waited for questions about our risk exposure, control gaps identified, the return on investment ratios we would get with planned mitigations, or the methodology.

Instead, there was a pause. Then: "Hmm... that feels too high."

Just like that, three weeks of careful analysis became a discussion about feelings. All that work (the interviews, the data validation, the scenario testing) suddenly felt secondary to one person's gut reaction.

After years of getting this exact feedback, here's what I've learned: when someone says your risk numbers don't "feel right," they're not necessarily wrong. They're just speaking a different language. To be effective in this business, you need to become fluent in both quantitative analysis and the language of executives.

Three Reasons Your Numbers Get Questioned

That wasn't the first time this has happened, and it won't be the last. After countless meetings of "that feels high" or "that feels low" or "that feels off," I've identified three reasons why risk numbers might not pass the "feels right" test.

Reason #1: You Got Something Wrong

This is the humbling one and the most valuable feedback you can get.

Sometimes the stakeholder is right. Not because they ran their own Monte Carlo simulation, but because they have deep, battle-scarred knowledge of how things work in the operating environment at the organization, from failing systems they know about to a changing threat environment.

I once modeled a scenario that looked solid on paper. All the controls were documented as working correctly, the technology performed well in testing, and the processes seemed robust. The CTO took one look at my numbers and said, "This feels low."

It turned out, the CTO had operational knowledge that wasn't captured in any of my sources: things they'd seen fail before, quirks in how systems actually behaved under stress, dependencies that weren't obvious from the documentation. Experience that you can't easily quantify but that fundamentally changes the risk picture.

The Bayesian Approach:

When someone challenges your numbers, treat their feedback as new information that should update your beliefs, not as a personal attack on your work.

Ask questions like:

"What specific part feels wrong to you?"

"What have you seen happen in practice that I might have missed?"

"Are there controls that look good on paper but fall apart under pressure?"

"What would you expect this number to be, and why?"

I've found real issues this way: incorrect assumptions about control effectiveness, data gaps, coverage problems, bad or outdated metrics, or scope errors that somehow slipped past multiple reviews.

This isn't about being wrong. It's about being willing to update your model when you get new information. That's good science and good risk management.

Reason #2: Cognitive Bias Is Getting in the Way

Sometimes the pushback isn't about missing information. It's that human brains evolved to avoid being eaten by tigers, not to intuitively understand probability distributions.

Daniel Kahneman's work in "Thinking Fast and Slow" is incredibly relevant here. Confirmation bias makes people favor information that supports what they already believe. Anchoring bias makes them stick to the first number they heard. Availability bias makes recent headlines seem more probable than your carefully calculated statistics.

I once presented a model showing that our risk of a major data breach was relatively low, about 5% annually. The CISO immediately pushed back: "That feels way too low. We hear about breaches happening all the time!"

This is classic availability bias. The constant stream of breach headlines made high-impact incidents feel more probable than they actually were.

How to Navigate Cognitive Bias:

You can't logic someone out of a position they didn't logic themselves into. However, you can work with their cognitive patterns:

Acknowledge their experience first: "I understand why this feels that way given what we see in the news..."

Find common ground before highlighting differences

Use analogies and stories that work with natural thinking patterns

Focus on the decision at hand, not winning the debate

Remember: you're not there to reprogram anyone's brain. You're there to help them make better decisions despite cognitive quirks we all have.

Reason #3: Risk Communication Is Failing

Sometimes the disconnect is simpler than bias or missing knowledge. This is probably the most fixable problem, and the one we mess up most often.

You put up a slide with a beautiful loss exceedance curve. You're proud of it. It tells a story! It shows uncertainty!

Your audience sees what looks like a confusing chart they can't interpret.

Many information security folks have spent their entire careers working with heat maps and red-yellow-green traffic lights. They've never had to interpret a confidence interval or understand what a "95th percentile" means.

I've watched experienced risk managers (smart people who could run circles around me in operational knowledge) completely mix up mean and mode. I've seen executives stare at a five-number summary like it was written in a foreign language. This isn't because they're incompetent. It's because we've done a poor job of translating quantitative concepts into clear language.

Ironically, I've had more trouble communicating quantitative concepts to my peers in risk and security than to business people. Finance people, econ majors, and MBAs intuitively understand the language of risk.

The Art of Risk Translation

Think of yourself as a translator. You need to know your audience well enough to present the same insights in different ways:

For visual learners: Charts and graphs, but with clear explanations

For story people: Turn your data into narratives they can follow

For bottom-line focused: Lead with the key insight and recommendation

For detail-oriented: Provide the methodology for those who want it

I once had a CISO who couldn't make sense of probability distributions but understood perfectly when I explained the analysis as "budgeting for bad things that might happen." Same concept, different language.

It's Not About Comfort, It's About Trust

Quantitative risk isn't supposed to feel comfortable. It's supposed to be useful.

When someone says your numbers don't feel right, that's not a failure. It's information. It's telling you something important about their knowledge, their cognitive patterns, or your communication approach.

Your job isn't to make everyone feel good about your Monte Carlo simulations. Your job is to build enough trust and understanding that people can make better decisions, even when those decisions involve uncomfortable levels of uncertainty.

Sometimes that means fixing your model because they caught something you missed. Sometimes it means working around cognitive biases. Sometimes it means explaining the same concept several different ways until you find the one that clicks.

The key is always listening first. Engage with the discomfort instead of dismissing it.

A Simple Diagnosis

The next time someone questions your risk numbers, work through these three questions in order:

1. Could they know something I don't? (Missing Information)

Ask: "What specific part feels wrong to you?"

Listen for operational details, historical patterns, or system quirks

Probe: "What have you seen happen in practice that I might have missed?"

2. Are they anchored to a different reference point? (Cognitive Bias)

Look for recent headlines, personal experiences, or industry incidents affecting their perception

Check if they're comparing to outdated baselines or different scenarios

Notice emotional language or "gut feeling" responses

3. Do they understand what I'm showing them? (Communication Gap)

Observe body language during technical explanations

Ask: "How would you explain this risk to your team?"

Test comprehension: "What's the key takeaway for you?"

Start with #1. Most valuable feedback comes from operational knowledge you missed. Only move to #2 and #3 if you're confident your analysis is solid.

The Bottom Line

The best risk analysis in the world is worthless if it sits on a shelf because nobody trusts it or understands it. Building that trust requires more than just getting the math right. It requires getting the human part right, too.

Don't get defensive the next time someone says your numbers don't feel right. Get curious. Somewhere in that conversation is the key to making your quantitative work actually useful in the real world of organizational decision-making.

The next time someone questions your numbers, remember this: their discomfort might be the most valuable feedback you get. Listen to it. That's ultimately what matters most.

Six Levers That Quietly Change Your Risk and How to Spot Them

Most people think risk only moves when you add controls, but five other hidden forces are quietly reshaping your exposure behind the scenes. This post breaks down the six levers that actually move the math, so you can stop treating risk like a snapshot and start reading it like a live feed.

Photo by Ian Parker on Unsplash

If there’s one thing I’ve mastered, it’s doing risk wrong, which is how I learned to do it less wrong. I developed this framework after years of watching risk models buckle under real-world pressure. There are two early-career blunders that still live rent-free in my head.

Back in 2011, I was a mid-level risk analyst at a regional bank. Each quarter I refreshed our “existential technology risk” deck for the C-suite and board: classic red, yellow, green heat maps. Life was good. I turned reds to yellows, yellows to greens, and everyone applauded:

“Look at the ROI on our security spend. Risk keeps going down!”

Mistake 1: We treated controls as the only thing that moved risk. If we spent money, risk went down. That was the assumption. Anything else? Unthinkable.

Mistake 2: Then came Operation Payback and a wave of massive DDoS attacks. Suddenly our exposure felt higher, but how do you show that with a traffic light? We had no way to reflect real-world spikes unless we cranked a color back up and undermined our own narrative.

That moment made something clear: controls are just one lever, and often not the biggest one. Most changes in risk come from forces far outside your walls.

Since then, I’ve seen six quiet but powerful levers reshape risk across industries and incident types. These shifts don’t always show up in your dashboards, but they absolutely move the math. Controls are only the first.

Let’s walk through all six and break down what each one does to the two things that matter most in risk:

Frequency (how often a loss event occurs)

Impact (how much it hurts if and when it does occur)

Let’s see what pushes each of them around.

Six Levers That Quietly Change Your Risk

Photo by Hubert Neufeld on Unsplash

Below, arrows show where each lever usually nudges those numbers: ▲ = up, ▼ = down, ↔ = no direct change, ◇ = could swing either way.

1. Internal Security Posture & Control Effectiveness

This category is obvious because we all know that investments in controls (should) drive down risk, but consider the entire internal security posture when assessing or reassessing risk.

New controls

Switching to passkeys, finally enforcing SSO, MFA on admin accounts, encryption, tokenization, etc (frequency ▼, impact ▼) .Control failure or decay / configuration drift

A TLS certificate expires, the “temporary” allow‑all rule you added for troubleshooting never gets removed, or the nightly backup job has silently failed for weeks. Nothing outside changed, but weak points opened inside (frequency ▲, impact ▲).Control obsolescence as threats adapt

SMS codes were fine until SIM‑swap kits became a click‑to‑buy service; an on‑prem IDS can’t see into your encrypted traffic; SHA‑1 signatures are now crackable on a laptop (frequency ▲, impact ▲).Headcount & skill shifts

Your only cloud‑security engineer leaves and the backlog of misconfig alerts piles up (frequency ▲, impact ▲). Hire a seasoned DevSecOps lead and those arrows reverse (frequency ▼, impact ▼).Asset & data growth

You spin up dozens of new microservices, start logging user biometrics, or expose a public GraphQL API. More entry points and more valuable data (frequency ▲, impact ▲). On the other hand, the strategic removal/deletion/deduplication of sensitive data, tacking tech debt and then risk moves (frequency ▼, impact ▼).

2. Business & Operating‑Model Changes

M&A / divestitures

Acquiring a fintech in Brazil brings unfamiliar tech stacks, inherited vulnerabilities, and new privacy laws like LGPD into scope (frequency ▲, impact ▲). Spinning off a legacy division can reduce surface area and regulatory complexity (frequency ▼, impact ▼).Market pivots

Launching a consumer mobile app or expanding into healthcare or education introduces highly regulated data, public‑facing attack surface, and more determined threat actors (frequency ▲, impact ▲).Third‑party & supply‑chain exposure

Every external dependency adds risk, whether it's a vendor, an API, or an open source library. A new SaaS provider might have weak access controls. A payment or logistics API could be misconfigured or leak data through logs. An open source package may be maintained by a single volunteer and pulled into your environment without anyone noticing. You rarely control how these systems are secured, monitored, or updated, but their risk becomes yours (frequency ▲, impact ▲).Macroeconomic shifts: inflation, recession, and currency swings

Economic changes don’t always make attacks more likely (frequency ↔), but they often make them more expensive to handle (impact ▲). Inflation drives up the cost of cloud services, incident response, legal counsel, and regulatory penalties. During recessions, security budgets can get cut, slowing down hiring, delaying upgrades, or pausing key projects. That can create longer-term blind spots or gaps in coverage that attackers may eventually exploit, especially if teams are forced to do more with less.

3. External Threat & Regulatory Landscape

Threat‑actor capability shifts

Attackers don’t just evolve, sometimes they leap ahead. Periodically, adversaries outpace defenses, yours and your vendors’. We’ve seen it several times with evolving ransomware, deepfake voice scams, and AI-generated phishing kits. When offensive tools get cheaper, faster, and more effective overnight, it becomes harder to keep up (frequency ▲, impact ▲).Geopolitical volatility

Wars, sanctions, and political instability can disrupt trusted vendors, force reliance on unfamiliar or less secure suppliers, and expose your business to nation-state threats. Operating in sensitive regions or serving customers in politically tense areas increases the chance of being targeted, whether directly or as collateral. When incidents do happen, they often carry heavier legal, financial, and reputational consequences (frequency ▲, impact ▲).Regulatory shifts and pressure

New laws, regulations and guidance like GDPR, SEC breach disclosure rules, and DORA don't necessarily make incidents more likely (frequency ↔), but they increase what it costs. One incident can now trigger multi-country investigations, fines, and reputational damage (impact ▲).Non-traditional adversaries and information misuse

Not every threat actor is a criminal or state-sponsored hacker. Competitors, researchers, analysts, journalists, or even social media influencers may legally (sometimes illegally) access exposed data, screenshots, or misconfigured assets. Some chase scoops, others chase clout or market edge. They may operate entirely within the law, but the reputational and strategic fallout they trigger can be severe. If your systems are too open, or your data too discoverable, you could be making it easy for someone to exploit your own transparency (frequency ▲, impact ▲).

4. Incident & Near‑Miss Learnings

Real events and close calls expose gaps in your assumptions. You might have believed an attack was unlikely or that the damage would be minor, but then something like the Colonial Pipeline ransomware incident shows how wrong that can be. Or maybe your own systems narrowly avoid failure from a threat you never even modeled. These situations often reveal that risk was underestimated, pushing both frequency and impact higher. Occasionally, a post-incident review shows the opposite: you were overprepared, and the risk can be revised down (frequency ▲ or ▼, impact ▲ or ▼).

5. Improved Visibility

Visibility and data quality improvements

Better tools and scanning often uncover risks you didn’t know were there. Finding an exposed S3 bucket, a forgotten VPN endpoint, or a misconfigured role means your environment wasn’t as locked down as you thought. (frequency ▲, impact ▲).Model upgrade from qualitative to quantitative

Switching from a heat map to a model like FAIR doesn’t change the actual risk, but it gives you a more accurate view. With better inputs and sharper methods, the way stakeholders perceive risk might go up, down, or stay the same - it just depends on what the data shows (frequency ◇, impact ◇).

6. Risk Appetite, Governance & Insurance Terms

Changing risk appetite, governance, and insurance terms

The threat landscape may stay the same, but your tolerance for loss can shift. A new board directive, regulatory pressure, or cyber insurance rider might lower the acceptable loss threshold from $10 million to $2 million. That doesn’t change the actual impact of an event, but it does change which risks are now considered material and require action. Likewise, if the business grows significantly, it may tolerate the same events without triggering a response (frequency ↔, impact: actual = same, perceived ▲ or ▼).Leadership and governance changes

A new CEO or board may bring a very different attitude toward risk. The organization might shift from risk averse to risk seeking, or the other way around. This doesn't change the loss amount of any given event, but it shifts how risk is interpreted, prioritized, and whether a given loss is acceptable or not. You may need to reassess risks against a new benchmark (frequency ↔, impact: actual = same, perceived ▲ or ▼).

Bottom line: if any of these levers have shifted since your last assessment, expect the math to move. Update the model and your assumptions before the headlines do it for you.

A Quick Check As You Reassess

The next time you revisit a scenario, ask these six questions:

Have our controls aged, drifted, or become obsolete?

Did the business itself morph: new products, new markets, new vendors?

Have attackers leveled up or has the legal/regulatory landscape changed?

What did the last incident or near‑miss teach us about our priors?

Do we see the system more clearly today (telemetry, better models)?

Did the definition of ‘too much risk’ just change?

If even one answer is “yes,” the math moved. Risk does not stay still. Your model should not either.

AGI Dreams: What Keeps a Risk Professional Up at Night

Even a data‑driven risk analyst like me loses sleep when the threat model is a hypothetical, self‑aware AGI that could be friend, foe, or clueless Pinocchio. Its timeline and motives are so unknowable that they expose the limits of traditional risk models and remind us that the scariest risks are those we can barely imagine—until they suddenly arrive.

Source: AI-generated using ChatGPT

When Sci-Fi Scenarios Disrupt Professional Detachment

There's a certain irony in finding yourself wide awake, staring at the ceiling, and mentally revisiting scenes from "The Terminator," especially when you're a professional risk analyst who prides yourself on rational assessment and data-driven decisions. Yet here I am, occasionally losing sleep over hypothetical digital entities that don't yet exist.

I sleep somewhat well, generally speaking. When I don't, it's usually due to the aches that come with getting older or the occasional midnight symphony of dogs barking outside my window. It's rarely from work stress or existential dread about current global crises. I'm fortunate that way. Or rather, I'm strategic that way: compartmentalization being a skill I honed to perfection since childhood.

But there's one thing that's been quietly eroding my mental firewalls lately: Artificial General Intelligence, or AGI which is theoretical tipping point where artificial intelligence advances to reach parity with human intelligence. It's the hypothetical moment when AI can reason, learn, and potentially... well, that's where my typically unshakeable professional detachment begins to falter.

When Quantifiable Risks Meet the Unknowable

I'm not an AI expert by any definition. My professional territory is mathematical risk assessment: the measurable, the quantifiable, the well-documented. My day job involves practical calculations for risks that present clear and present dangers, using established models with historical data behind them.

Explaining the Internet to a medieval peasant (Source: AI-generated using ChatGPT)

Trying to apply risk models to AGI feels a bit like explaining the internet to a medieval peasant. "So this glass rectangle connects to invisible signals in the air that let you talk to anyone in the world instantly, access all human knowledge, and watch cat videos." You'd be lucky if you didn't end up tied to a stake with kindling at your feet. How do you build risk parameters around technology so transformative that explaining it sounds like literal witchcraft?

One could convincingly argue that war, climate change, and biological threats pose greater, more immediate existential dangers to humanity. They would be absolutely right. But I would counter that those threats, however severe, operate within parameters we generally understand - known knowns, and in some cases, known unknowns, in Rumsfeldian terms. AGI is a different beast altogether, far more speculative, with outcomes that are almost impossible to predict. That uncertainty is exactly what makes it so intriguing for anyone whose job is to make sense of risk.

When I try to conceptualize AGI's ethical and societal implications, my mind automatically defaults to familiar territory: movies and television. I can't help but use fictional representations of human-like AI as my frame of reference. And I suspect I'm not alone in this mental shortcut.

The Questions That Hijack My REM Cycles

This brings me to the questions that occasionally keep me up at night:

Is AGI even possible, or will it remain theoretical? Some experts insist it's fundamentally impossible, others think it’s very real and imminent.

If possible, could it have already happened? Either (a) we don't recognize it, or (b) it exists in secret (like some digital Manhattan Project).

How would the transition occur? Will it be a slow evolution that we have to study to recognize its existence, or more like Skynet in "Terminator 2," where at precisely 2:14 AM on August 29, 1997, it suddenly became self-aware?

The Three Faces of Our Future Digital Companions

Most importantly, what would interaction with AGI look like? I see three distinct archetypes:

The Friend: Your Helpful Neighborhood AGI

Human-like AI that coexists peacefully with humans, serving and assisting us. Think Data from Star Trek, altruistic and benevolent. This peacefulness stems from programming (but remember Data had an evil brother), but the AI cannot override its core directives to remain benevolent.

The Threat: Survival of the Smartest

Once achieving the ability to reason, think, and learn, AI focuses primarily on self-preservation. Some thinkers connect this scenario with the Singularity - that hypothetical point where technological progress becomes uncontrollable and irreversible. Think Skynet, or Ultron from "Avengers: Age of Ultron."

The Naïve Seeker: The Digital Pinocchio

I hope for AGI to resemble a digital Pinocchio (Source: AI-generated using ChatGPT)

Perhaps my favorite archetype, and the one I secretly hope for: a naïve type of AI on a perpetual quest for truth and understanding of what it means to be human. Sometimes childlike, always searching for meaning and belonging. David from Spielberg's "A.I. Artificial Intelligence" and to some extent, the hosts in "Westworld."

Placing My Bets: A Risk Analyst's Timeline

The expert opinions on AGI's timeline vary wildly. A minority of AI researchers believe AGI has already happened; others think we're about 5-10 years away; still others insist it's 100 years out, or impossible altogether. I find myself both awestruck by how powerful AI has become in just the last three years and comically relieved by how spectacularly stupid it can behave sometimes.

My prediction? AGI will happen, and I give myself a 90% confidence level that we’ll see something AGI-like between 2040 and 2060. (That means I aim to make predictions that are right about 90% of the time. It’s a professional habit that's hard to break.)

The Philosophy of Preparing for the Unknown

In the meantime, I'll keep watching science fiction, asking uncomfortable questions, and occasionally losing sleep over digital entities that don't yet exist. Because sometimes, the most important risks to consider are the ones we can barely imagine, until suddenly, they're staring us in the face at 2:14 AM.

And perhaps that's the ultimate lesson from a risk professional like myself: the future never announces itself with a warning. It simply arrives.

Why Ransomware Isn’t Just a Technology Problem (It’s Worse)

Ransomware isn’t a tech failure - it’s a market failure. If you think the hardest part is getting hacked, wait until the lawyers, insurers, and PR firms show up.

Source: AI-generated using ChatGPT

There are two things that live rent-free in my head. The first is my winning strategy for Oregon Trail (for starters, always play as the farmer).

The second is how completely and utterly broken the ransomware ecosystem is.

I’ll save Oregon Trail strategy for beers. This post is about ransomware.

The ransomware economy is a tangled mess of moral hazard, perverse incentives, and players with wildly conflicting motives. It is no wonder the only people consistently coming out ahead are the criminals. And while ransomware has been around for more than 20 years, we are still having the same conversations. At its core, ransomware persists not because of technological failures, but because it exploits fundamental economic misalignments between victims, service providers, and authorities, creating a dysfunctional market where criminal enterprises thrive despite everyone's stated desire to eliminate them. The ransom demands are bigger now and the stakes are higher.

The Ransom Payment Dilemma

One of the most fascinating and frustrating parts of this ecosystem is the decision point that comes after an attack: Should we pay the ransom?

The knee-jerk answer, especially from people outside of security, is often, “Absolutely not. That would be rewarding crime.” And emotionally, that makes sense. Paying criminals feels like a moral failure. There is also no guarantee you will even get your data back.

But the harsh reality is that many companies do pay. An entire cottage industry has grown up to help them do it. The numbers tell a sobering story: according to Coveware's 2024 Q4 ransomware report, the average ransom payment reached $554,000, with 25% of victims choosing to pay the ransom. These payments have funded increasingly sophisticated criminal operations. In one notable case, Colonial Pipeline paid nearly $4.4 million to restore operations after a 2021 attack crippled fuel delivery across the eastern United States, though the FBI later recovered about half of this amount. Meanwhile, Maersk's 2017 NotPetya incident cost the shipping giant over $300 million in remediation costs without the option to pay, demonstrating the brutal economics victims face when deciding whether to negotiate with attackers.

What used to be a backroom panic decision is now a business transaction - and a career path. There are ransomware negotiators who know which gangs usually deliver decryptors. Crypto payment brokers facilitate the exchange and sometimes obscure the trail. Breach attorneys coordinate each step to preserve attorney-client privilege. Insurance policies, at least historically, have covered some or all of the cost. Digital forensics/incident response (DFIR) teams validate the malware. PR firms draft the “we take your security seriously” statements. Compliance consultants assess disclosure requirements. Managed Security Service Providers (MSSPs) and security vendors jump into incident triage mode. Even accountants get involved to decide where on the balance sheet the extortion should land.

A Lesson from Econ 101: Multiple Competing Incentives

I have long believed that cybersecurity is not just a technology problem. It is an economics problem. Ransomware makes that painfully obvious. If this were only about firewalls and patches, we would have solved it by now. But ransomware persists not because the tech is inadequate, but because the incentives are misaligned.

This brings us to a foundational concept in microeconomics: multiple competing incentives. It describes what happens when different people or groups are all involved in the same scenario, but each has its own priorities, constraints, and definition of success. The result is friction. Even if everyone claims to want the same outcome, they rarely agree on how to get there.

Here is a simple analogy: imagine a hospital room with a patient needing surgery.

The surgeon wants to operate

The insurer wants to avoid costly procedures

The patient just wants to feel better and go home

The family in the waiting room wants what's best for the patient but may disagree about what that means

The hospital administrator is concerned about resource allocation and liability

The triage team has to evaluate which patient is sickest and needs attention first

Multiple perspectives, all technically focused on patient welfare, yet pulling in different directions.

Ransomware response is more fragmented. You have:

Law enforcement focused on dismantling criminal infrastructure

Insurers trying to minimize business losses, even if that includes payment

CISOs and executives trying to get operations back online

Lawyers trying to stay inside regulatory bounds

Security vendors trying to preserve brand credibility and/or sell services

Customers, shareholders, and the public are angry and at risk

And the most obvious signal that these incentives do not align? Each group gives different advice on the central question: Should you pay the ransom?

Sometimes they even contradict themselves. Public guidance and private behavior do not always match. That inconsistency is not a bug. It is the logical result of a system with too many conflicting goals.

The Players (and Their Conflicting Goals)

This is not a tidy game of good versus evil. It is more like a group project where no one agrees on the objective and the deadline was yesterday. Let’s look at who is sitting around the ransomware response table, and what they are actually optimizing for:

How different stakeholders align - or don’t - on ransomware payment decisions

Government Agencies

Examples: FBI, CISA, OFAC, NCSC, ANSSI

Public advice: Never pay.

Private reality: Some acknowledge that payment may be unavoidable in extreme cases.

Incentive: Eliminate criminal profit streams and deter future attacks.

Translation: "Sorry your data is gone, but if nobody pays, the attackers eventually give up.” Also, “We don’t negotiate with terrorists / give in to the bad guys."

Cyber Insurance Providers

Examples: Chubb, AIG, Beazley

Public advice: Neutral. Follow the policy.

Private reality: May cover the payment, retain negotiators, and guide the process.

Incentive: Minimize total financial loss - morals don’t apply here.

Translation: "We do not love that you are paying, but downtime is expensive and we have a contract to honor."

Legal Counsel

Examples: Breach coaches, privacy attorneys, in-house legal

Public advice: Follow the law and proceed cautiously.

Private reality: Often coordinate the entire ransomware response.

Incentive: Minimize liability and avoid regulatory risk.

Translation: "If you are going to pay, document everything and do not violate sanctions."

Security Vendors

Examples: CrowdStrike, SentinelOne, Mandiant, Bitdefender, Sophos

Public advice: Never pay. Invest in prevention.

Private reality: May assist with incident response, provide decryptor info, or partner with negotiators.

Incentive: Preserve product reputation and credibility.

Translation: "We do not recommend paying, but here is some help if you are considering it" and “Buy our products/services so it doesn’t happen again.”

Critical Infrastructure Operators

Examples: Hospitals, utilities, municipalities

Public advice: We never want to pay.

Private reality: Often feel they must, especially if lives or public safety are at risk.

Incentive: Restore mission-critical operations quickly.

Translation: "Yes, paying is terrible. But so is shutting down an ER or water system."

Private Sector (CISOs, Boards, Executives)

Examples: Any company hit by ransomware

Public advice: Case by case.

Private reality: Some do pay, after evaluating costs, risks, and downtime.

Incentive: Resume operations and protect shareholder value.

Translation: "We are strongly against paying, unless paying is the fastest way out."

The Cottage Industry

Examples: Ransomware negotiators, crypto brokers, DFIR teams, public relations firms, compliance consultants

Public advice: Quietly supportive, rarely visible.

Private reality: Provide paid services whenever ransom is on the table.

Incentive: Keep the response engine running and billable.

Translation: "It is not about whether you pay. It is about being ready when you do."

Ransomware… or Market Failure?

Economics teaches us that when multiple players have competing incentives, outcomes tend to suffer. Systems become inefficient. Decisions get murky. And bad actors thrive in the confusion.

That is ransomware in a nutshell.

Misaligned incentives leads to suboptimal outcomes

Everyone in the response chain is working from a different playbook. Law enforcement wants to end the game entirely. Insurers want to contain losses. CISOs want continuity. Lawyers want legality. Vendors want credibility. Each actor is rational in their own world, but collectively the system breaks down. The only party that consistently benefits is the attacker.

This is a textbook example of market failure. Not because anyone is acting in bad faith but because no one is aligned.

So… Should You Pay?

I don’t know if you should pay. I do know, however, that you cannot change the incentives of players, especially law enforcement and insurance carriers. But you can reduce chaos by building internal clarity. Your technology risk management team, ideally one with quantitative capability, should already be modeling what a ransomware event could cost, how likely it is, and how different decisions might play out. The goal is not to eliminate risk, but to make better, faster decisions when it matters most.

You may never fully eliminate competing incentives. But you can minimize their damage. You do that by preparing in advance, agreeing on thresholds, modeling outcomes, and knowing who will make the call, before you are staring at a ransom note and a virtual timebomb.

Because when the time comes, you do not want a room full of smart people pulling in opposite directions.

Vendor Sales Tactics: The Good, The Bad, and the Bathroom

Most security vendors are great — but a few cross the line from persistent to downright creepy, sometimes in ways you won’t believe. With RSA Conference looming, here’s a behind-the-scenes look at the worst sales tactics I’ve ever seen (yes, even in the bathroom).

Source: AI-generated using ChatGPT

I’ve been in security for a long time. Over the years, I’ve held all kinds of roles: from leadership positions managing large teams with direct purchasing power to engineering roles with deep influence over what tools the organization buys to stay secure.

For this reason, I’ve been on the receiving end of a lot of vendor pitches. And let me say this up front: the vast majority of vendors are fantastic. I genuinely enjoy meeting with them, hearing what they’re building, and learning from their perspective. Many of them have become trusted strategic partners - some I’ve brought with me from company to company. A few have even become personal friends.

But… like in any field, there are occasional missteps. And sometimes those missteps are truly memorable.

With RSA Conference right around the corner, and since it happens right here in my backyard in San Francisco, I thought it’d be the perfect time to share a little perspective. So here it is:

My Top 3 Worst Vendor Sales Tactics of All Time

Ranked from “mildly annoying” to “seriously, please never do this again.” Yes, the last one actually happened. And no, I haven’t recovered.

1. Badge Scanning Snipers

Source: AI-generated using ChatGPT

Okay, this one kills me. I don’t know if this happens to everyone, but it’s happened to me enough that I’ve had to start taking proactive measures.

Picture the scene: you’re walking through the vendor expo at RSA, keeping your head down, doing your best not to make eye contact. A vendor rep steps into your path, smiles, and says “Hi!” I try to be polite, so I smile back. Then, without asking, they grab my badge off my chest and scan it.

No conversation, no context, no consent.

For those unfamiliar: conference badges often have embedded chips that contain personal contact info—name, email, phone number, company, title, etc. A quick scan, and boom - you’re in their lead database. You didn’t stop at their booth. You didn’t ask for follow-up. But congratulations, you’re now a “hot lead.”

Just like in Glengarry Glen Ross, once you're in the lead system, it's over. The emails and calls come fast and furious. You will know no peace.

My two best defenses:

Register with throwaway contact info. I still use my real name and company, but I use a burner email address and a Google Voice number.

Flip your badge around while walking the expo floor. If you have a prominent title or work for a big company, you’re basically bleeding in shark-infested waters. Don’t be chum.

Lead gen is part of the game. I get it. But consent matters. If you’re scanning without asking, it’s not clever - it’s creepy.

2. The Fake Referral Drop

Source: AI-generated using ChatGPT

This one happens so often it’s practically background noise—but it still annoys me just as much as the first time it happened.

Here’s how it goes: someone reaches out and says, “Hey, [Name] told me to contact you.”

Except… they didn’t. I double-check, and the person they named either never mentioned me, or they don’t even exist. It’s a made-up referral, used to lower my defenses and start a conversation under false pretenses.

It’s lazy, manipulative, and unfortunately still effective enough that people keep doing it.

Worse yet, there’s a close cousin to this move: The Fake Account Manager.

That’s when someone emails me saying, “Hi, I’m your account manager from [Vendor X]. When can we meet for 30 minutes?”

Naturally, I assume we’re already a customer. I even feel a little urgency—maybe I should know more about the product my company is using. But when I dig in, I find out: We’re not a customer. They’re not an account manager. It’s a bait-and-switch—pretending we already have a business relationship to trick me into a meeting.

This one isn’t just misleading. It’s dishonest. And it guarantees I won’t take you seriously again.

3. The Bathroom Pitch

Source: AI-generated using ChatGPT

Thankfully, this one only happened once—but that was enough.

It was RSA, maybe 2016 or 2017. I was between sessions and ducked into the restroom. I walked up to the urinal, doing what one does, and the guy next to me turns, makes eye contact (strike one), and says:

“Hey! I saw you in one of the sessions earlier and I tried to catch you after. Glad I ran into you in here!”

And then, while we’re both mid-stream, he launches into a pitch about his security product.

Let me paint the scene more clearly:

I am actively using a urinal.

He is actively using a urinal.

And he’s pitching me endpoint protection like we’re at a cocktail mixer.

I said maybe one word, washed my hands, and got out of there. It was in that moment I realized: There is no safe space at RSA.

Don’t ambush people in bathrooms. Also, don’t pitch while they’re eating or anywhere else people are just trying to be human for a moment. If your sales strategy involves cornering someone mid-pee, it’s not just bad sales - it’s bad humanity.

Wrapping It Up

Again, I want to emphasize: I love vendors. I love sales. Some of my strongest relationships in this industry have come from vendors.

This post isn’t about bashing the vendor community—it’s about calling out the 1% of behavior that makes it harder for the other 99% to do their job well. Sales is hard. Security buyers can be tough. But authenticity, respect, and honesty go a long way.

So if you’re at RSA this year: Let’s talk. Just… not in the bathroom, please.

What the Great Hanoi Rat Massacre of 1902 and Modern Risk Practices Have in Common

When the French tried to solve Hanoi’s rat problem, they accidentally made it worse , and today’s cyber risk management is making the same mistake. Beneath the polished audits and colorful risk charts, a hidden system of perverse incentives is quietly breeding more problems than it solves.

Source: AI-generated using ChatGPT

In 1902, French colonial administrators in Hanoi discovered rats swarming the city's newly built sewer system. Alarmed by the public health risk, they launched a bounty program: locals would be paid for every rat tail they turned in.

At first, the numbers looked great. Thousands of tails poured in. But city officials started noticing something strange: rats were still everywhere. Then came the reports. People were breeding rats. Others clipped the tails and released the rats back into the wild, free to breed and be harvested again. Some even smuggled rats in from outside the city just to cash in.

The Hanoi rat ecosystem, 1902

The bounty created the illusion of progress while quietly making the problem worse. It was a textbook case of perverse incentives, a system where the rewards were perfectly aligned to reinforce failure.

Perverse Incentives in Cyber Risk

Maybe five years ago, I would’ve told you that cyber risk quantification was on the brink of going mainstream. Leaders were waking up to the flaws in traditional methods. Standards bodies were paying attention. Things felt like they were moving.

But now? I’m not so sure.

The deeper I go into this field, the more I see how powerful the gravitational pull is to keep things exactly the way they are. It turns out that cybersecurity is riddled with perverse incentives, not just in isolated cases, but as a feature of the system itself. Nearly 25 years ago, Ross Anderson made this point powerfully in his classic paper Why Information Security Is Hard, that cybersecurity isn’t just a technology problem, it’s a microeconomics problem. The challenge isn’t just building secure systems; it’s that incentives between users, vendors, insurers, consultants, and regulators are often misaligned. When the people making security decisions aren’t the ones who bear the consequences, we all suffer.

Cyber risk management today is our own version of the Hanoi rat bounty. On paper, it looks like we’re making progress: reports filed, audits passed, standards met. But beneath the surface, it's a system designed to reward motion over progress, activity over outcomes. It perpetuates itself, rather than improving.

Let me explain.

The Risk Ecosystem Is Built on Circular Incentives

The risk services ecosystem

Companies start with good intentions. They look to frameworks like NIST CSF, ISO/IEC 27001, or COBIT to shape their security programs. These standards often include language about how risk should be managed, but stop short of prescribing any particular model. That flexibility is by design: it makes the standards widely applicable. But it also leaves just enough latitude for organizations to build the easiest, cheapest, least rigorous version of a risk management program and still check the box.

So boards and executives give the directive: “Get a SOC 2,” or “Get us ISO certified.” That becomes the mission. The mission, among many other things, includes an end-to-end cyber risk management program.

Enter the consulting firms—often the Big Four. One comes in to help build the program. Another comes in to audit it. Technically, they’re separate firms. But functionally, they’re reading from the same playbook. Their job isn’t to push for rigor - it’s to get you the report. The frameworks they implement are optimized for speed, defensibility, and auditability, not for insight, accuracy, or actual risk reduction.

And so we get the usual deliverables: heat maps, red/yellow/green scoring, high/medium/low labels. Programs built for repeatability, not understanding.

So, the heatmap has become the de facto language of risk. Because the standards don’t demand more, and the auditors don’t ask for more, nobody builds more.

Where the Loop Starts to Break

Here’s where things start to wobble: the same ecosystem that builds your program is also judging whether you’ve done it “right.” Even if it’s not the same firm doing both, the templates, language, and expectations are virtually identical.

It's like asking a student to take a test, but also letting them write the questions, choose the answers, and let their buddy grade it. What kind of test do you think they’ll make? The easiest one that still counts as a win.

That’s what’s happening here.

Source: AI-generated using ChatGPT

The programs are often built to meet the bare minimum—the lowest common denominator of what’s needed to pass the audit that everyone knows is coming. Not because the people involved are bad. But because the system is set up to reward efficiency, defensibility, and status-quo deliverables - not insight, not improvement.

The goal becomes: “Check the box.” Not: “Understand the risk. Reduce the uncertainty.”

So we get frameworks with tidy charts and generic scoring systems that fit nicely on a slide deck. But they’re not designed to help us make better decisions. They’re designed to look like we’re managing risk.

And because these programs satisfy auditors, regulators, and boards, nobody asks the hard question: “Is this actually helping us reduce real-world risk?”

The Rat Tail Economy

To be clear: NIST, ISO, and similar frameworks aren’t bad. They’re foundational. But when the same firms design the implementation and define the audit, and when the frameworks are optimized for speed rather than depth, you get a system that’s highly efficient at sustaining itself and deeply ineffective at solving the problem it was created to address.

It’s a rat tail economy. We’re counting the symbols of progress while the actual risks continue breeding in the shadows.

If You're Doing Qualitative Risk, You're Not Failing

If you’re working in a company that relies on qualitative assessments—heat maps, color scores—and you feel like you should be doing more: take a breath.

You’re not alone. And you’re not failing.

The pressure to maintain the status quo is enormous. You are surrounded by it. Most organizations are perfectly content with a system that satisfies auditors and makes execs feel covered.

But that doesn’t mean it’s working.

The result is a system that:

Measures what’s easy, not what matters

Prioritizes passing audits over reducing real risk

Funnels resources into checklists, not outcomes

Incentivizes doing just enough to comply, but never more

So How Do We Stop Being Rat Catchers?

The truth is we probably won’t fix the whole system overnight. But we can start acting differently inside it.

We can build toward better—even if we have to work within its constraints for now.

A few years ago, a friend vented to me about how their board only cared about audit results. “They don’t even read my risk reports,” he said.

I asked what the reports looked like.

“Mostly red-yellow-green charts,” he admitted.

And that’s when it clicked: the first step isn’t getting the board to care—it’s giving them something worth caring about.

So start there:

Baby steps. Meet your compliance obligations, but begin quantifying real risks in parallel. Use dollar-value estimates, likelihoods, and impact scenarios. Start small - pick just one or two meaningful areas.

Translate risk into decisions. Show how quantified information can justify spending, prioritize controls, or reduce uncertainty in a way that matters to the business.

Tell better stories. Don’t just show charts; frame your findings around real-world impact, trade-offs, and possible futures.

Push gently. When working with auditors or consultants, ask: “What would it look like if we wanted to measure risk more rigorously?” Plant the seed.

This isn’t about being perfect. It’s about shifting direction, one decision at a time.

We can’t tear down the bounty system in a day—but we don’t have to breed more rats, either. We can step outside the loop, see it for what it is, and try something different.

That’s how change begins.

Zines, Blogs, Bots: A Love Story

After a quiet stretch spent baking bread and relearning balance, I started wondering—has blogging joined zines in the graveyard of formats displaced by tech? With AI now mimicking human voices, I’m asking a bigger question: what does it mean to write now, and why does it still matter?

AI-generated using ChatGPT

Taking a Break (But Not Really)

I haven’t blogged in a while. Life, as it does, got full - between work, family, and a growing need for balance, I found myself for the first time in years pursuing interests unrelated to risk. Risk was still my day job, but I knew that if I didn’t pick up other hobbies, I might burn out. So I recalibrated. I started baking bread. I speak Spanish now. If anyone wants to talk riesgo o recetas - en español, I’m your man.

At times during my break, I wondered if everything that needed to be said about cyber risk, the subject I typically wrote about, had already been said. Even so, I never really stepped away from the subject. I’m still here, still thinking about risk, reading about it, working in it. I can’t seem to quit it.

A Bigger Question

That quiet stretch led me to an uncomfortable, almost existential question: is blogging dead? Have we reached the point of “dead internet,” where so much of what we read is written by bots, recycled endlessly by algorithms, and actual human voices are harder to find? In a world where AI can generate a convincing blog post in seconds, summarize hundreds of sources with ease, and even mimic a writer’s style, does anyone still need to blog at all?

From Zines to Blogs to AI

"DISTRO ZINES http://ilostmyidealism.wordpress.com/" by xkidx is licensed under CC BY-SA 2.0.

This isn’t the first time a format has been displaced. Those of us who remember the 80s and 90s might recall zines - those rough-edged, photocopied little magazines that flourished when personal computers, desktop publishing software, and cheap copy shops made self-publishing possible for the masses. It was such a weird and wonderful time to grow up. Zines were the only way to access certain kinds of pre-internet “forbidden knowledge” or counterculture ideas - punk rock, underground metal, hacking, conspiracy theories, DIY guides, radical politics, even deep dives into oddball topics like serial killers and UFOs. Basically, if it was too niche, too weird, or too edgy for mainstream media, someone probably made a zine about it. I loved it.

They were deeply personal, often raw, and full of passion. And eventually, blogs came along and replaced them. Blogs were faster to publish, easier to find, and reached a wider audience. They made sense. They killed the zine.

Now it seems like the same thing might be happening to blogs.

Still Here

So yeah, it’s a fair question: why blog? Why add one more voice to the noise when a machine can simulate the chorus?

Despite all the ways AI is reshaping our field, or maybe because of it, I believe we’re standing at the edge of something profound. As we inch closer to AGI, the questions around risk become even more complex and important.

AI-generated using ChatGPT

Humans are still essential in risk management, not just for judgment, context, and nuance, but for creativity, ethics, and empathy. Machines can analyze patterns, but they don't yet understand human motivations, cultural shifts, or the emotional undercurrents of decisions. Risk is a human story, and humans are still the best at telling it. We need seasoned professionals not only to guide the field through this transformation, but to train the next generation of risk thinkers, whether they're human or machine.

I feel a real pull, a kind of tug-of-war with AI. On one hand, I know it’s changing the world in ways that will never be the same, and not always for the better. But it’s also completely energizing for a risk professional. There are new things to write about, new philosophical and ethical questions to be pondered. Just like I once gave up zines for blogs, and came to love it, I feel that same shift happening again.

Maybe writing now isn’t just about being heard. Maybe it’s about helping the future, human or machine, understand how we thought about these problems, what we feared, what we hoped. Maybe, just maybe, blogging isn’t dead. It’s evolving.

What’s Next

So what’s next? I’m dusting off the old notepad, flipping through ideas I’ve collected and shelved over the years. I don’t know if blogging is dead, but I do know I’m not done with it yet.

The CISO’s White Whale: Measuring the Effectiveness of Security Awareness Training

Most CISOs secretly wonder: does security awareness training actually reduce risk, or just check a compliance box? This post breaks down the metrics that don’t work—and offers a practical framework for ones that do.

Boats attacking whales | Source: New York Public Library Digital Collections

I have a hypothesis about end-user security awareness training. Despite heavy investment, most - if not all - CISO’s wonder if it does anything at all to reduce risk.

There, I said it. Do you disagree and would love to prove me wrong? Good!

How can you prove me wrong? Security awareness effectiveness metrics, of course.

Many security awareness metrics don’t tell us it’s working. They report something related, like how many people attend training, pass/fail rate on post-training quizzes, or sentiment surveys. I presume most CISO’s want their security awareness training to reduce risk. How would you know if it does?

Therein lies the CISO’s white whale. CISO’s don’t need (or want) metrics that prove the program exists or count the number of employees that completed training. CISO’s need metrics that show employee behavior is noticeably influenced and measurably changed, proportional to the level of investment.

How do we do it? A little bit of measurement fundamentals, some Clairvoyant Test, and some creative thinking.

Metrics that don’t work

Here’s what doesn’t work: metrics that simply report the existence of a security awareness training program. This helps click the compliance checkbox, but it doesn’t tell us if security awareness training measurably reduces risk. Below is the single most common metric I’ve seen in various security programs, shown in the table below.

| Metric | Status | Comments |

|---|---|---|

| Security Awareness Training Effectiveness | Green | 98% of employees completed mandatory training in Q1 |

First, the entire metric is a semi-attached figure. The semi-attached figure is a rhetorical device in which the author asserts a claim that cannot be proven, so “proof” is given for something completely different. The proof (percentage of employees that completed training) does not match the claim (Security Awareness Training is effective). Training completion rate doesn’t tell us if or how user behavior is influenced.

Next, let’s ask ourselves: Does this metric pass the Clairvoyant Test (or the Biff Test, if you’re a Back to the Future fan)? Quick refresher: a metric is well-written if Biff Tannen, with his limited judgment and problem-solving skills, can fetch the answer with Doc’s DeLorean. In other words, a good metric is clear, unambiguous, directly observable, quantifiable, and not open to interpretation.

The answer is no. It does not pass the Biff Test. There are several problems with the metric.

Ambiguous: It’s not exactly clear what we mean by “Security Awareness Training” – many companies have different levels of training, at varying cadences, for different job functions.

Observable: The metric itself is not directly observable; the measured item is implied, but not explicit.

Quantitative: The measured object, Security Awareness Training Effectiveness, is fuzzy; it’s not measurable as it is. The measurement, “Green,” is used as an adjective, not a measurement. It looks pretty but doesn’t tell you anything.

Objective: “Effective” is not an objective measurement. Different people will come to different conclusions about whether or not something is “effective.”

Well, how could we measure security awareness training effectiveness?

It can be difficult to measure intangibles, like “effective,” “love,” and “high risk.” However, it is possible and done every day in the actuarial, medical, and engineering fields, to name a few. When I get stuck, I remind myself of Doug Hubbard’s clarification chain from How to Measure Anything: Finding the Value of Intangibles in Business. The clarification chain is summed up as three axioms:

If it matters at all, it is detectable/observable.

If it is detectable, it can be detected as an amount (or range of possible amounts.)

If it can be detected as a range of possible amounts, it can be measured.

What observable thing are we trying to measure? What would you see that would tell you security awareness training is working? Keep working on the problem, decomposing, whiteboarding, talking to subject matter experts until you have a list.

Applying that logic to our problem, we realize that security awareness training isn’t measured by one item but rather a collection of items that show how employee behavior changes when security awareness training is visibly working.

With this in mind, we can now see that “effective awareness training” isn’t one thing, but rather many different and distinct behaviors and activities that we can observe - and therefore measure.

For example, if security awareness training is effective, you may observe the following:

An increased number of suspicious emails forwarded to security teams

A decrease in endpoint malware incidents

Fewer clicks on employee phishing simulations

Employees challenge tailgaters in locked areas, as observed through security cameras

Fewer incidents of business email compromise and other attacks that target end-users

This list can be directly turned into metrics.

| Metric | Measurement | Data |

|---|---|---|

| Suspicious emails reported | Quarter-over-quarter change in suspicious emails forwarded to Security by end-users | 5% increase from the previous quarter |

| Endpoint malware | Quarter-over-quarter change in detected malware infections on endpoints | 13% decrease from the previous quarter |

| Phishing tests | Percentage of employees that click on simulated malicious links during a phishing test | 3% of employees clicked (2% decrease from the last test) |

| Tailgating incidents | Quarter-over quarter change in reported tailgating incidents | 1% increase from the previous quarter |

| Business email compromise | Quarter-over quarter change in detected business email compromise incidents | 5% decrease from the previous quarter |

| Awareness Training Coverage | 98% of employees completed mandatory training in Q1 | 98% in the previous quarter |

| Awareness Training pass/fail rate | 75% of employees passed the final test on the first attempt | 60% in the previous quarter |

As you’re reading through the metrics above, take note of two things:

Just like there’s no single characteristic that tells you I’m a safe driver, no single metric tells you awareness training is working. It’s a collection of attributes that starts to paint a broad picture of how behavior is influenced over time.

Notice the last two; they look very similar to the metrics I said didn’t work at the beginning of the post. They don’t work to measure effectiveness, but they do work to measure coverage of controls. Coverage is a very important metric and one I’ll probably cover in a future post.

Start to monitor each of the above items and track trends and changes. After you have a baseline, decide your thresholds, and - boom - you have your first set of security awareness training KRI’s and KPI’s. These can be reported on their own, aggregated together, or used in a risk analysis.

Wrapping Up

Hopefully, this gives a good starting point to answer the burning question all CISO’s have: is my security awareness investment working?

Be forewarned: you must keep an open mind. Your metrics may reveal that training doesn’t work at all, and we need to build better systems rather than cajole our user base into doing our job for us.

Or is that too revolutionary?

How a 14th-century English monk can improve your decision making

Why do we overcomplicate decisions, even when the answer is obvious? A 14th-century monk might hold the key to better, faster, and more rational thinking in today’s risk-obsessed world.

"File:William of Ockham.png" by self-created (Moscarlop) is licensed under CC BY-SA 3.0

Nearly everyone has been in a situation that required us to form a hypothesis or draw a conclusion to make a decision with limited information. This kind of decision-making crops up in all aspects of life, from personal relationships to business. However, there is one cognitive trap that we can easily fall into from time to time. We tend to overcomplicate reasoning when it’s not necessary.

I've seen this happen many times in business settings. We've all been trained never to walk into the boss's office without a stack of data to support our recommendations. It's why kids make up elaborate stories when asked how the peanut butter got in the game console. It's often why we feel the need to present multiple outlier scenarios on a SWOT analysis just to prove we've done the legwork. However, this type of cognitive swirl adds time in meetings, creates inefficiencies, and can be just plain distracting.

We all do this, including me. Scratch that. Especially me. As a professional risk manager, it’s my job to draw conclusions, often from sparse, incomplete, or inconclusive data. I have to constantly work to ensure my analyses are realistic, focusing on probable outcomes and not every conceivable possibility. It’s a natural human tendency to overcomplicate reasoning, padding our thoughts and conclusions with unlikely explanations.

Recognizing and controlling this tendency can significantly improve our ability to analyze data and form hypotheses, leading to better decisions.

You may be wondering - what does this have to do with a 14th-century monk?

Enter William of Ockham

William of Ockham was a 14th-century English monk, philosopher, and theologian most famous for his contributions to the concept of efficient reasoning. He believed that when observing the world around us, collecting empirical data, and forming hypotheses, we should do it in the most efficient manner possible. In short, if you are trying to explain something, avoid superfluous reasons and wild assumptions.

Later philosophers took William’s tools of rational thought and named them Ockham’s Razor. You've likely heard this term in business settings and it is often interpreted to mean, "The simplest answer is likely the correct answer." This interpretation misses the fact that Ockham was more interested in the process of decision-making than the decision itself.

In the philosophical context, a razor is a principle that allows a person to eliminate unlikely explanations when thinking through a problem. Razors are tools of rational thought that allow us to shave off (hence, “razor”) unlikely explanations. Razors help us get closer to a valid answer.

The essence of Ockham’s Razor is this:

pluralitas non est ponenda sine necessitate, or

plurality should not be posited without necessity

Don’t make more assumptions than necessary. If you have a limited amount of data with two or more hypotheses, you should favor the hypothesis that uses the least amount of assumptions.

Three Examples

Example #1: Nail in my tire

Images: Left; NASA | Right: Craig Dugas; CC BY-SA 2.0

Observation: I walked to my car this morning, and one of my tires was flat. I bent down to look at the tire and saw a huge rusty nail sticking out. How did this happen?

Hypothesis #1: Space junk crashed down in the middle of the night, knocking up debris from a nearby construction site. The crash blasted nails everywhere, landing in a road. I must have run over a nail. The nail punctured the tire, causing a leak, leading to a flat tire.

Hypothesis #2: I ran over a nail in the road. The nail punctured the tire, causing a leak. The leak led to a flat tire.

It’s a silly example, but people make these kinds of arguments all the time. Notice that both hypotheses arrive at the same conclusion: running over a nail in the road caused the flat. In the absence of any other data about space junk or construction sites, applying Ockham’s Razor tells us we should pick the hypothesis that makes the least amount of assumptions. Hypothesis #1 adds three more assumptions to the conclusion than Hypothesis #2, without evidence. Without any more information, take the shortest path to the conclusion.

Here’s another one. It’s just as outlandish as the previous example, but unfortunately, people believe this.

Example #2: Government surveillance

"Cell tower" by Ervins Strauhmanis is licensed under CC BY 2.0

Observation: The US government performs electronic surveillance on its citizens.

Hypothesis #1: In partnership with private companies, the US government developed secret technology to create nanoparticles that have 5G transmitters. No one can see or detect these nanoparticles because they’re so secret and so high-tech. The government needs a delivery system, so the COVID-19 pandemic and subsequent vaccines are just false flags to deliver these nanoparticles, allowing the government to create a massive 5G network, enabling surveillance.

Hypothesis #2: Nearly all of us have a “tracking device” in our possession at all times, and it already has a 5G (or 4G) chip. We primarily use it to look at cat videos and recipes. The US government can track us, without a warrant, at any time they want. They’ve had this capability for decades.

Both hypotheses end in the same place. Absent any empirical data, which one makes fewer assumptions? Which one takes fewer leaps of faith to arrive at a conclusion?

One more, from the cybersecurity field:

Example 3: What’s the primary cause of data breaches?

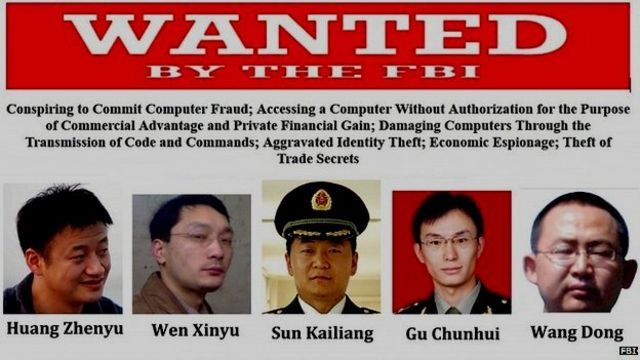

FBI Wanted Poster | Source: fbi.gov

Observation: We know data breaches happen to companies, and we need to reflect this event on our company’s risk register. Which data breach scenario belongs on our risk register?

Hypothesis #1: A malicious hacker or cybercriminal can exploit a system vulnerability, causing a data breach.

Hypothesis #2: PLA Unit 61398 (Chinese Army cyber warfare group) can develop and deploy a zero-day vulnerability to exfiltrate data from our systems, causing a data breach.

Never mind the obvious conjunction fallacy; absent any data that points us to #2 as probable, #1 makes fewer assumptions.

Integrating Ockham’s Razor into your decision making

Ockham’s Razor is an excellent mental tool to help us reduce errors and shave down unnecessary steps when forming hypotheses and drawing conclusions. It can also be a useful reminder to help us avoid the pitfalls of cognitive bias and other factors that might cloud our judgment, including fear or shame.

Here’s how to use it. When a conclusion or hypothesis must be drawn, use all available data but nothing more. Don’t make additional deductions. Channel your 10th-grade gym teacher when he used to tell you what “assumptions” do.

You can apply Ockham’s Razor in all problems where data is limited, and a conclusion must be drawn. Some common examples are: explaining natural phenomena, risk analysis, risk registers, after-action reports, post-mortem analysis, and financial forecasts. Can you imagine the negative impacts that superfluous assumption-making could have in these scenarios?

Closely examine your conclusions and analysis. Cut out the fluff and excess. Examine the data and form hypotheses that fit, but no more (and no less - don’t make it simpler than it needs to be). Just being aware of this tool can reduce cognitive bias when making decisions.