Risk Mythbusters: We need actuarial tables to quantify cyber risk

Think you need actuarial tables to quantify cyber risk? You don’t — actuaries have been pricing rare, high-uncertainty risks for centuries using imperfect data, expert judgment, and common sense, and so can you.

Risk management pioneers: The New Lloyd's Coffee House, Pope's Head Alley, London

The auditor stared blankly at me, waiting for me to finish speaking. Sensing a pause, he declared, “Well, actually, it’s not possible to quantify cyber risk. You don’t have cyber actuarial tables.” If I had a dollar for every time I heard that… you know how the rest goes.

There are many myths about cyber risk quantification that have become so common, they border on urban legend. The idea that we need vast and near-perfect historical data is a compelling and persistent argument, enough to discourage all but the most determined of risk analysts. Here’s the flaw in that argument: actuarial science is a varied and vast discipline, and insurers sell coverage on everything from automobile accidents to alien abduction - many of which do not have actuarial tables or even historical data. Waiting for “perfect” historical data is a fruitless exercise and will prevent the analyst from using the data at hand, no matter how sparse or flawed, to drive better decisions.

Insurance without actuarial tables

Many contemporary insurance products, such as car, house, fire, and life have rich historical data today. However, many insurance products have for decades - in some cases, centuries - been issued without historical data, actuarial tables, or even good information. For those still incredulous, consider the following examples:

Auto insurance: Issuing auto insurance was unheard of when the first policy was issued in 1898. Companies only insured horse-drawn carriages up to that point, and actuaries used data from other types of insurance to set a price.

Celebrities’ body parts: Policies on Keith Richards’ hands and David Beckham’s legs are excellent tabloid fodder, but also a great example of how actuaries are able to price rare events.

First few years of cyber insurance: Claims data was sparse in the 1970’s, when this product was first conceived, but there was money to be made. Insurance companies set initial prices based on estimates and adjacent data. Prices were adjusted as claims data became available.

There are many more examples: bioterrorism, capital models, and reputation insurance to name a few.

How do actuaries do it?

Many professions, from cyber risk to oil and gas exploration, use the same estimation methods developed by actuaries hundreds of years ago. Find as much relevant historical data as possible - this can be adjacent data, such as the number of horse-drawn carriage crashes when setting a price for the first automobile policy - and bring it to the experts. Experts then apply reasoning, judgment, and their own experience to set insurance prices or estimate the probability of a data breach.

Subjective data encoded quantitatively isn’t bad! On the contrary, it’s very useful when there is deep uncertainty, when data is sparse or expensive to acquire, or when the risk is new and emerging.
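One common way to encode an expert's judgment as a number and then revise it as claims data arrives is a Beta-Binomial update. The sketch below is only an illustration of that idea, not a method attributed to any insurer mentioned here. The prior (roughly a 5% annual chance of a loss event, weighted like 20 years of observation) and the observed counts are made-up numbers.

```python
def beta_update(prior_alpha, prior_beta, events, trials):
    """Return posterior Beta parameters after observing `events` in `trials`."""
    return prior_alpha + events, prior_beta + (trials - events)


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Hypothetical expert estimate: about a 5% chance per year,
# held with the weight of roughly 20 years of evidence.
prior_alpha, prior_beta = 1.0, 19.0

# Hypothetical observed data: 2 loss events in 10 company-years.
post_alpha, post_beta = beta_update(prior_alpha, prior_beta, events=2, trials=10)

print(f"Prior mean:     {beta_mean(prior_alpha, prior_beta):.3f}")
print(f"Posterior mean: {beta_mean(post_alpha, post_beta):.3f}")
```

The expert's estimate is the starting point, and each new observation moves it. With sparse data the judgment dominates, and as data accumulates the data takes over.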

I’m always a little surprised when people reject better methods altogether, citing the lack of “perfect data,” then swing in the opposite direction to gut checks and wet finger estimation. The tools and techniques are out there that not only make cyber risk quantification possible but could also give any company a competitive edge. Entire industries have been built around less-than-perfect data, and we as cyber risk professionals should not use a lack of perfect data as an excuse not to quantify cyber risk. If there is a value placed on Tom Jones' chest hair then certainly we can predict the loss risk of a data incident... go ask the actuaries!

Better Security Metrics with Biff Tannen

Can your metrics pass the Biff Test? If a time-traveling dimwit like Back to the Future's Biff Tannen can fetch and understand your metric, it’s probably clear enough to guide better decisions.

In a previous post, I wrote about testing metrics with The Clairvoyant Test. In short, a metric is properly written if a clairvoyant, who only has the power of observation, can identify it.

Some people struggle with The Clairvoyant Test. They have a hard time grasping the rules: the clairvoyant can observe anything but cannot make judgments, read minds or extrapolate. It’s no wonder they have a hard time; our cultural view of clairvoyants is shaped by the fake ones we see on TV. For example, Miss Cleo, John Edward, and Tyler “The Hollywood Medium” Henry often do make personal judgments and express opinions about future events. Almost every clairvoyant we see in movies and TV can read minds. I think people get stuck on this and will often incorrectly declare that a metric or measurement passes The Clairvoyant Test, due to the cultural perception that clairvoyants know everything.

Since this is a cultural problem and not a technical one, is there a better metaphor we can use? Please allow me to introduce you to Biff Tannen.

Meet Biff

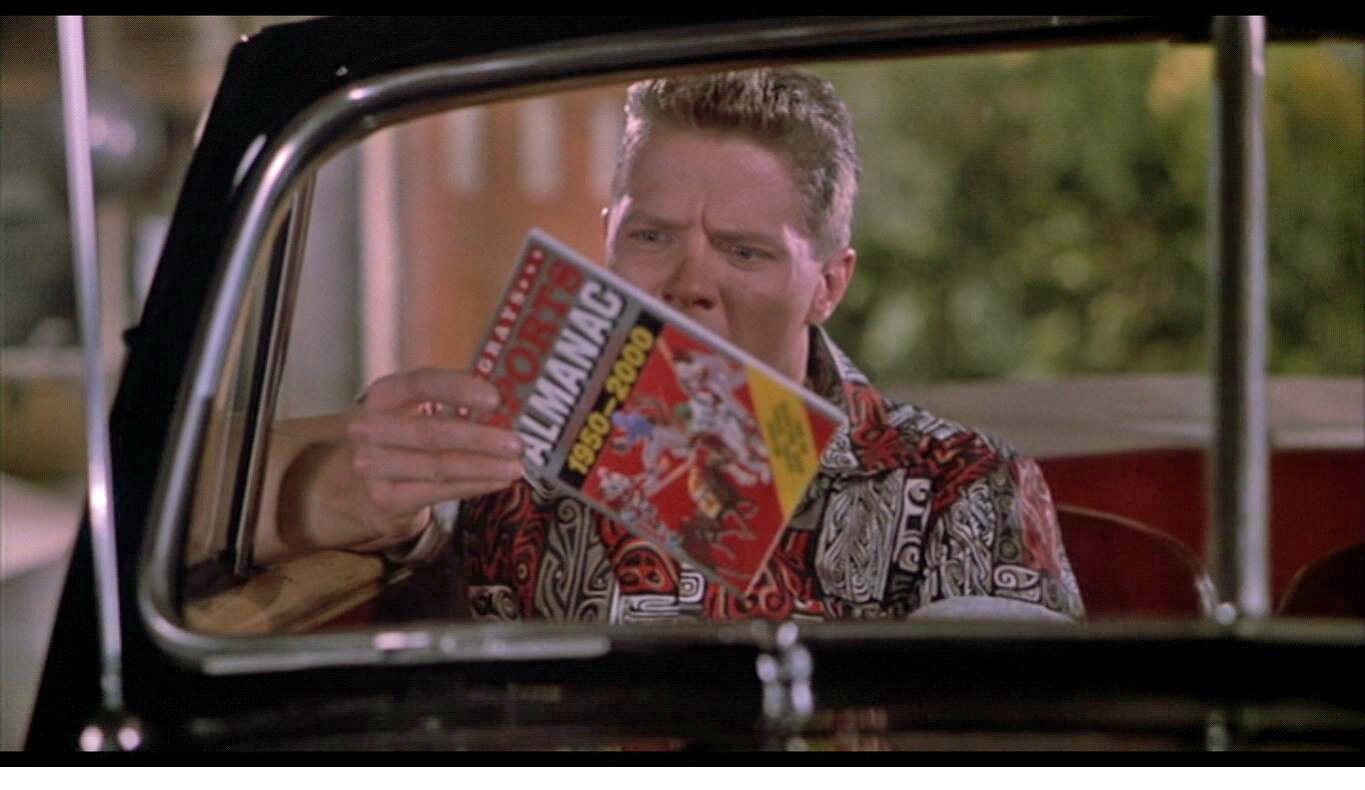

Biff Tannen is the main villain in all three Back to the Future movies. In Back to the Future Part II, Biff steals Doc’s time-traveling DeLorean in 2015 for nefarious reasons. Among other shenanigans, 2015 Biff gives a sports almanac to 1955 Biff, providing the means for him to become a multi-millionaire and ruining Hill Valley in the process.

If you recall, Biff has the following negative characteristics:

He’s a dullard and has no conjecture abilities

He lacks common sense

He has little judgment or decision-making capabilities

He’s a villain, so you can’t trust him

But...

He has access to Doc’s time-traveling DeLorean so he can go to any point in the future and fetch, count, look up or directly observe something for you.

Here’s how Biff Tannen can help you write better metrics: if Biff can understand and fetch the value using his time-traveling DeLorean, it’s a well-written metric. Metrics have to be clear, unambiguous, directly observable, quantifiable, and not open to interpretation.

You should only design metrics that are Biff-proof. Biff gets stuck on ambiguity and abstractions; he can only understand concepts that are right in front of him, such as the sports almanac. Lacking judgment and common sense, he can only count what he directly observes. If you design a metric that Biff Tannen can fetch for you, your audience will understand it and interpret it the same way. That’s the magic in this.

How to Use the Biff Test: A Few Examples

Metric: % of vendors with adequate information security policies

Biff cannot fetch this metric for you; he has no judgment or common sense. He will get stuck on the word “adequate” and not know what to do. Your audience, reading the same metric, will also get confused and this opens the measurement up to different interpretations. Let’s rewrite:

New Metric: % of vendors with information security policies in compliance with the Company’s Vendor Security Policy

The re-written metric assumes there is a Vendor Security Policy that describes requirements. The new metric is unambiguous and clear. Biff – with his limited abilities – can fetch it.

Metric: % of customer disruption due to downtime

This one is slightly more complex, but it shows up on many lists of company metrics. Biff would not be able to fetch this metric for us. “Disruption” is ambiguous, and furthermore, think about the word “downtime.” Downtime of what? How does that affect customers? Let’s rewrite this into a series of metrics that show the total picture when shown as a set.

New Metrics:

Total uptime % on customer-facing systems

% customer-facing systems meeting uptime SLAs

Mean time to repair (MTTR) on customer-facing systems

# of abandoned customer shopping carts within 24 hours following an outage

Biff can fetch the new metrics, and non-IT people (your internal customers!) will be able to interpret and understand them.
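Because the rewritten metrics are directly observable, they can be computed mechanically from an outage log, which is exactly what Biff would do. Here is a minimal sketch of the uptime and mean-time-to-repair calculations. The outage records, the reporting period, and the 99.9% SLA target are all hypothetical values chosen for illustration.

```python
from datetime import datetime, timedelta

# Hypothetical outage log for one customer-facing system: (start, end).
outages = [
    (datetime(2024, 1, 3, 10, 0), datetime(2024, 1, 3, 10, 45)),
    (datetime(2024, 1, 17, 2, 30), datetime(2024, 1, 17, 4, 0)),
]
period_start = datetime(2024, 1, 1)
period_end = datetime(2024, 2, 1)
sla_uptime_pct = 99.9  # assumed SLA target, not from any real contract


def total_downtime(outages):
    """Sum the duration of every outage in the log."""
    return sum((end - start for start, end in outages), timedelta())


def uptime_pct(outages, period_start, period_end):
    """Percentage of the reporting period the system was up."""
    period = period_end - period_start
    return 100.0 * (1 - total_downtime(outages) / period)


def mean_time_to_repair(outages):
    """Average outage duration."""
    return total_downtime(outages) / len(outages)


uptime = uptime_pct(outages, period_start, period_end)
mttr = mean_time_to_repair(outages)
print(f"Uptime: {uptime:.3f}%")
print(f"Meets SLA: {uptime >= sla_uptime_pct}")
print(f"Mean time to repair: {mttr}")
```

Nothing in the calculation requires a judgment call: every input is a timestamp someone can look up.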

Metric: % of critical assets that have risk assessments performed at regular intervals

Biff doesn’t have judgment and gets confused at “regular intervals.” He wonders, what do they mean by that? Could “regular” mean once a week or every 10 years?

New Metric: % of critical assets that have risk assessments performed at least quarterly

The rewritten metric assumes that “critical asset” and “risk assessment” have formal definitions in policies. If so, one small tweak and now it passes the Biff Test.

Conclusion and Further Work

Try this technique with the next security metric you write and anything else you are trying to measure, such as OKRs, performance targets, KRIs and KPIs.

I often ask a lay reader to review my writing to make sure it's not overly technical and will resonate with broad audiences. For this same reason, we would ask Biff - an impartial observer with a time machine - to fetch metrics for us.

Of course, I’m not saying your metric consumers are as dull or immoral as Biff Tannen, but the metaphor does make a good proxy for the wide range of skills, experience, and backgrounds that you will find in your company. A good metric that passes the test means that it’s clear, easy to understand and will be interpreted the same way by the vast majority of people. Whether you use the Biff or the Clairvoyant Test, these simple thought exercises will help you write crisp and clear metrics.

Better Security Metrics with the Clairvoyant Test

Think your security metrics are clear? Put them to the test—The Clairvoyant Test—a thought experiment that strips away ambiguity, subjectivity, and fuzziness to make sure your metrics are measurable, observable, and decision-ready.

"Clairvoyant at Whitby" by Snapshooter46 is licensed under CC BY-NC-SA 2.0

There’s an apocryphal business quote from Drucker, Deming, or maybe even Lord Kelvin that goes something like this: “You can’t manage what you don’t measure.” I’ll add that you can’t measure what you don’t clearly define.

Clearly defining the object of measurement is where many security metrics fail. I’ve found one small trick borrowed from the field of Decision Science that helps in the creation and validation of clear, unambiguous, and succinct metrics. It’s called The Clairvoyant Test, and it’s a 30-second thought exercise that makes the whole process quick and easy.

What is the Clairvoyant Test?

The Clairvoyant Test was first introduced in 1975 as a decision analysis tool in a paper titled “Probability Encoding in Decision Analysis” by Spetzler and Staël von Holstein. It’s intended to be a quick critical thinking tool to help form questions that ensure what we want to measure is, in reality, measurable. It’s easily extended to security metrics by taking the metric description or definition and passing it through the test.

The Clairvoyant Test supposes that one can ask a clairvoyant to gather the metric, and if they are able to fetch it, it is properly formed and defined. In real life, the clairvoyant represents the uninformed observer in your company.

There’s a catch, and this is important to remember: the clairvoyant only has the power of observation.

The Catch: Qualities of the Clairvoyant

The clairvoyant can only view events objectively through a crystal ball (or whatever it is clairvoyants use).

They cannot read minds. The clairvoyant’s powers are limited to what can be observed through the crystal ball. You can’t ask the clairvoyant if someone is happy, if training made them smarter, or if they are less likely to reuse passwords over multiple websites.

The clairvoyant cannot make judgments. For example, you can’t ask if something is good, bad, effective, or inefficient.

They can only observe. Questions posed to the clairvoyant must be framed as observables. If your object of measurement can’t be directly observed, decompose the problem until it can be.

They cannot extrapolate. The clairvoyant cannot interpret what you may or may not mean, offer conjecture or fill in the gaps of missing information. In other words, they can only give you data.

What’s a well-designed metric that passes the Clairvoyant Test?

A well-designed metric has the following attributes:

Unambiguous: The metric is clearly and concisely written; in fact, it is so clear and so concise that there is very little room for interpretation. For example, the number of red cars on Embarcadero St. between 4:45 and 5:45 pm will be interpreted the same way by the vast majority of people.

Objective: Metrics avoid subjective judgments, such as “effective” or “significant.” Those words mean different things to different people and can vary greatly across age, experience, cultural, and language backgrounds.

Quantitative: Metrics need to be quantitative measurements. “Rapid deployment of critical security patches” is not quantitative; “Percentage of vulnerabilities with an EPSS probability of 80% or higher remediated within ten days” is.

Observable: The metrics need to be designed so that anyone, with the right domain knowledge and access, can directly observe the event you are measuring.
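A quick way to check whether a metric really has these attributes is to try to compute it. The sketch below does that for the EPSS example above, using made-up vulnerability records. Note one assumption the code forces into the open: the metric's wording does not say when the ten-day clock starts, so this sketch assumes it starts at the detection date.

```python
from datetime import date

# Hypothetical vulnerability records; "remediated" is None if still open.
vulns = [
    {"id": "V-1", "epss": 0.92, "detected": date(2024, 3, 1), "remediated": date(2024, 3, 6)},
    {"id": "V-2", "epss": 0.85, "detected": date(2024, 3, 2), "remediated": date(2024, 3, 20)},
    {"id": "V-3", "epss": 0.40, "detected": date(2024, 3, 3), "remediated": date(2024, 3, 4)},
    {"id": "V-4", "epss": 0.81, "detected": date(2024, 3, 5), "remediated": None},
]


def pct_high_epss_remediated_on_time(vulns, epss_threshold=0.80, max_days=10):
    """Percentage of vulnerabilities at or above the EPSS threshold
    that were remediated within max_days of detection (assumed start of the clock)."""
    in_scope = [v for v in vulns if v["epss"] >= epss_threshold]
    if not in_scope:
        return None  # metric is undefined when nothing is in scope
    on_time = [
        v for v in in_scope
        if v["remediated"] is not None
        and (v["remediated"] - v["detected"]).days <= max_days
    ]
    return 100.0 * len(on_time) / len(in_scope)


result = pct_high_epss_remediated_on_time(vulns)
print(f"{result:.1f}% of high-EPSS vulnerabilities remediated within ten days")
```

If you find yourself unable to write the filter without asking someone what a word means, the metric is not yet ready for the clairvoyant.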

A few examples…

Let’s try a few common metrics and pass through The Clairvoyant Test to see if they’re measurable and written concisely.

Metric: % of users with privileged access

The clairvoyant would not be able to reveal the value of the metric. “Privileged access” is a judgment call and means different things to different people. The clairvoyant would also need to know what system to look into. Let’s rewrite:

New Metric: % of users with Domain Admin on the production Active Directory domain

The new metric is objective, clear, and measurable. Additional systems and metrics (root on Linux systems, AWS permissions, etc.) can be aggregated.

Let’s try a metric that is a little harder:

Metric: Percentage of vendors with effective cybersecurity policies.

The clairvoyant would not be able to reveal this either – “effective” is subjective, and, believe it or not, a cybersecurity policy is not the same across all organizations. Some have a 50-page documented program, others have a 2-page policy, and still others would provide a collection of documents: org chart, related policies, and a 3-year roadmap. To rewrite the metric, “effective” needs to be defined, and “policy” needs to be decomposed. For example, a US-based bank could start with this:

New Metric: % of vendors that have a written and approved cybersecurity policy that adheres to FFIEC guidelines.

This metric is a good starting point but needs further work – the FFIEC guidelines by themselves don’t pass The Clairvoyant Test, but we’re getting closer to something that does. We can now create an internal evaluation system or scorecard for reviewing vendor security policies. In this example, keep decomposing the problem and defining attributes until it passes The Clairvoyant Test.

Conclusion and Further Work

Do your security metrics pass The Clairvoyant Test? If they don’t, you may have a level of ambiguity that leads to audience misinterpretation. Start with a few metrics and try rewriting them. You will find that clearly stated and defined metrics lead to a security program that is easier to manage.

Probability & the words we use: why it matters

When someone says there's a "high risk of breach," what do they really mean? This piece explores how fuzzy language sabotages decision-making—and how risk analysts can replace hand-wavy terms with probabilities that actually mean something.

The medieval game of Hazard

So difficult it is to show the various meanings and imperfections of words when we have nothing else but words to do it with. -John Locke

A well-studied phenomenon is that perceptions of probability vary greatly between people. You and I perceive the statement “high risk of an earthquake” quite differently. There are so many factors that influence this disconnect: one’s risk tolerance, events that happened earlier that day, cultural and language considerations, background, education, and much more. Words sometimes mean a lot, and other times, convey nothing at all. This is the struggle of any risk analyst when they communicate probabilities, forecasts, or analysis results.

Differences in perception can significantly impact decision making. Some groups of people have overcome this and think and communicate probabilistically - meteorologists and bookies come to mind - but other areas, such as business, lag far behind. My position has always been that if business leaders can start to think probabilistically, like bookies, significantly better risk decisions can be made, yielding an advantage over their competitors. I know from experience, however, that I need to first convince you there’s a problem.

The Roulette Wheel

A pre-COVID trip to Vegas reminded me of the simplicity in betting games and their usefulness in explaining probabilities. Early probability theory was developed to win at dice games, like hazard - a precursor to craps - not to advance the field of math.

Imagine this scenario: we walk into a Las Vegas casino together and I place $2,000 on black on the roulette wheel. I ask you, “What are my chances of winning?” How would you respond? It may be one of the following:

You have a good chance of winning

You are not likely to win

That’s a very risky bet, but it could go either way

Your probability of winning is 47.4%

Which answer above is most useful when placing a bet? The last one, right? But, which answer is the one you are most likely to hear? Maybe one of the first three?

All of the above could be typical answers to such a question, but the first three reflect attitudes and personal risk tolerance, while the last answer is a numerical representation of probability. The last one is the only one that should be used for decision making; however, the first three examples are how humans talk.
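For the curious, the 47.4% is simple arithmetic. The sketch below assumes an American double-zero wheel (18 black, 18 red, and 2 green pockets) and the standard even-money payout on a color bet. The wheel type is an assumption on my part, chosen because it matches the figure above.

```python
from fractions import Fraction

# Assumed American roulette wheel: 18 black, 18 red, 2 green (0 and 00).
black, red, green = 18, 18, 2

p_black = Fraction(black, black + red + green)
print(f"P(black) = {p_black} = {float(p_black):.1%}")

# Expected value of a $2,000 even-money bet on black.
bet = 2000
expected_value = bet * p_black - bet * (1 - p_black)
print(f"Expected value of the bet: ${float(expected_value):.2f}")
```

The number also tells you something the three verbal answers cannot: the bet loses money on average, and by how much.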

I don’t want us all walking around like C3PO, quoting precise odds of successfully navigating an asteroid field at every turn, but consider this: not only is “a good chance of winning” not helpful, you and I probably have a different idea of what “good chance” means!

The Board Meeting

Let’s move from Vegas to our quarterly Board meeting. I've been in many situations where metaphors are used to describe probabilities and then used to make critical business decisions. A few recent examples that come to mind:

We'll probably miss our sales target this quarter.

There's a snowball's chance in hell COVID infection rates will drop.

There's a high likelihood of a data breach on the customer database.

Descriptors like the ones above are the de facto language of forecasting in business: they're easy to communicate, simple to understand, and do not require a grasp of probability - which most people struggle with. There's a problem, however. Research shows that our perceptions of probability vary widely from person to person. Perceptions of "very likely" events are influenced by many factors, such as gender, age, cultural background, and experience. Perceptions are further influenced by the time of day the person is asked to make the judgment, a number you might have heard recently that the mind anchors to, or confirmation bias (a tendency to pick evidence that confirms our own beliefs).

In short, when you report “There's a high likelihood of a data breach on the customer database” each Board member interprets “high likelihood” in their own way and makes decisions based on the conclusion. Any consensus about how and when to respond is an illusion. People think they’re on the same page, but they are not. The CIA and the DoD noticed this problem in the 1960’s and 1970’s and set out to study it.

The CIA’s problem

One of the first papers to tackle this work is a 1964 CIA paper, Words of Estimative Probability by Sherman Kent. It's now declassified and a fascinating read. Kent takes the reader through how problems arise in military intelligence when ambiguous phrases are used to communicate future events. For example, Kent describes a briefing from an aerial reconnaissance mission.

Aerial reconnaissance of an airfield

Analysts stated:

"It is almost certainly a military airfield."

"The terrain is such that the [redacted] could easily lengthen the runways, otherwise improve the facilities, and incorporate this field into their system of strategic staging bases. It is possible that they will."

"It would be logical for them to do this and sooner or later they probably will."

Kent describes how difficult it is to interpret these statements meaningfully; not to mention, make strategic military decisions.

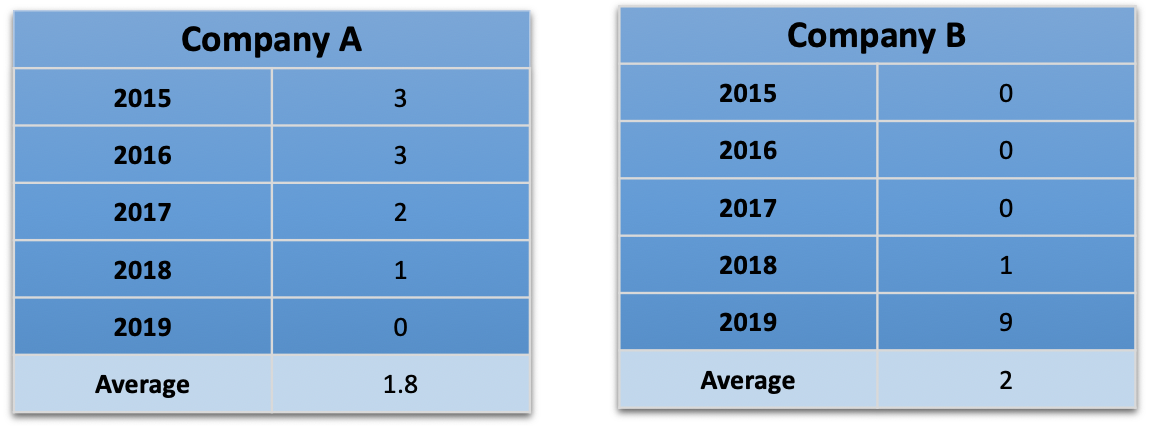

The next significant body of work on this subject is "Handbook for Decision Analysis" by Scott Barclay et al for the Department of Defense. A now-famous 1977 study was conducted on 23 NATO officers, asking them to match probabilities, articulated in percentages, to probability statements. The officers were given a series of 16 statements, including:

It is highly likely that the Soviets will invade Czechoslovakia.

It is almost certain that the Soviets will invade Czechoslovakia.

We believe that the Soviets will invade Czechoslovakia.

We doubt that the Soviets will invade Czechoslovakia.

Only the probabilistic phrase (“highly likely,” “almost certain,” “we believe,” “we doubt,” and so on) was changed across the 16 statements. The results may be surprising:

"Handbook for Decisions Analysis" by Scott Barclay et al for the Department of Defense, 1977

It is obvious that the officers' perceptions of probabilities are all over the place. For example, there's an overlap with "we doubt" and "probably," and the inconsistencies don't stop there. The most remarkable thing is that this phenomenon isn't limited to 23 NATO officers - take any group of people, ask them the same questions, and you will see very similar results.

Can you imagine trying to plan for the Soviet invasion of Czechoslovakia, literal life and death decisions, and having this issue? Let’s suppose intelligence states there's a “very good chance” of an invasion occurring. One officer thinks “very good chance” feels about 50/50 - a coin flip. Another thinks that’s a 90% chance. They both nod in agreement and continue war planning!

Can I duplicate the experiment?

I recently discovered a massive, crowdsourced version of the NATO officer survey called www.probabilitysurvey.com. The website collects perceptions of probabilistic statements, then shows an aggregated view of all responses. I took the survey to see if I agreed with the majority of participants, or if I was way off base.

My perceptions of probabilities (from www.probabilitysurvey.com). The thick black bars are my answers

I was surprised that some of my responses were so different from everyone else’s, while others were right in line. I work with probabilities every day and work with people to translate what they think is possible, and probable, to probabilistic statements. Thinking back, I consider many terms in the survey as synonymous with each other, while others perceive slight variations.

This is even more proof that if you and I are in a meeting, talking about high likelihood events, we will have different notions of what that means, leading to mismatched expectations and inconsistent outcomes. This can destroy the integrity of a risk analysis.

What can we do?

We can't really "fix" this, per se. It's a condition, not a problem. It's like saying, "We need to fix the problem that everyone has a different idea of what 'driving fast' means." We need to recognize that perceptions vary among people and adjust our own expectations accordingly. As risk analysts, we need to be intellectually honest when we present risk forecasts to business leaders. When we walk into a room and say “ransomware is a high likelihood event,” we know that every single person in the room hears “high” differently. One may think it’s right around the corner, and someone else may think it’s a once-every-ten-years event and that there is plenty of time to mitigate.

That’s the first step. Honesty.

Next, start thinking like a bookie. Experiment with using mathematical probabilities to communicate future events in any decision, risk, or forecast. Get to know people and their backgrounds; try out different techniques with different people. For example, someone who took meteorology classes in college might prefer probabilities and someone well-versed in gambling might prefer odds. Factor Analysis of Information Risk (FAIR), an information risk framework, uses frequencies because they are nearly universally understood.

For example,

"There's a low likelihood of our project running over budget."

Becomes…

There's a 10% chance of our project running over budget.

Projects like this one, in the long run, will run over budget about one time in ten.
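The same 10% can be restated as a probability, as odds, or as a long-run frequency, depending on what your audience prefers. Here is a small sketch of those conversions. Stating the frequency in years needs one extra assumption, how many such projects run per year, and the value of 2 used here is purely hypothetical.

```python
from fractions import Fraction


def odds_against(p):
    """Return odds against an event as (against, in_favor) in lowest terms."""
    frac = Fraction(p).limit_denominator(1000)
    return frac.denominator - frac.numerator, frac.numerator


def one_in_n(p):
    """Return n such that the event happens about 1 time in n trials."""
    return 1 / p


def years_between_events(p, projects_per_year):
    """Average years between events; projects_per_year must be supplied by you."""
    return 1 / (p * projects_per_year)


p_over_budget = 0.10
against, in_favor = odds_against(p_over_budget)

print(f"Probability: {p_over_budget:.0%}")
print(f"Odds: {against} to {in_favor} against")
print(f"Frequency: about 1 in {one_in_n(p_over_budget):.0f} projects")
print(f"At 2 projects a year: about once every "
      f"{years_between_events(p_over_budget, 2):.0f} years")
```

All four lines say the same thing. Pick the one your audience already thinks in.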

Take the quiz yourself on www.probabilitysurvey.com. Pass it around the office and compare results. Keep in mind there is no right answer; everyone perceives probabilistic language differently. If people are sufficiently surprised, test out using numbers instead of words.

Numbers are unambiguous and lead to clear objectives, with measurable results. Numbers need to become the new de facto language of probabilities in business. Companies that are able to forecast and assess risk using numbers instead of soft, qualitative adjectives, will have a true competitive advantage.

Resources

Words of Estimative Probability by Sherman Kent

Handbook for Decision Analysis by Scott Barclay et al for the Department of Defense

Take your own probability survey

Thinking, Fast and Slow by Daniel Kahneman | a deep exploration into this area and much, much more

Recipe for passing the OpenFAIR exam

Thinking about the OpenFAIR certification? Here's a practical, no-fluff study guide to help you prep smarter—not harder—and walk into the exam with confidence.

Obtaining The Open Group’s OpenFAIR certification is a big career booster for information risk analysts. Not only does it look good on your CV, it demonstrates your mastery of FAIR to current and potential employers. It also makes you a better analyst because it deepens your understanding of risk concepts that you may not use often. I passed the exam myself a while back, and I’ve also helped people prepare and study for it. This is my recipe for studying for and passing the OpenFAIR exam.

What to study

The first thing you need to understand in order to pass the exam is that the certification is based on the published OpenFAIR standard, last updated in 2013. Many people and organizations - bloggers, risk folks on Twitter, the FAIR Institute, me, Jack Jones himself - have put their own spin and interpretation on FAIR in the years since the standard was published. Reading this material is important to becoming a good risk analyst but it won’t help you pass the exam. You need to study and commit to memory the OpenFAIR standard. If you find contradictions in later texts, favor the OpenFAIR documentation.

Now, get your materials

The two most important texts are:

Open Risk Taxonomy Technical Standard (O-RT) - free, registration required

Open Risk Analysis Technical Standard (O-RA) - free, registration required

Two more optional texts, but highly recommended:

OpenFAIR Foundation Study Guide - $29.95

Measuring and Managing Information Risk: A FAIR Approach by Jack Freund and Jack Jones - book, $49.95 on Amazon

How to Study

This is how I recommend you study for the exam:

Thoroughly read the Taxonomy (O-RT) and Analysis (O-RA) standards, cover to cover. Use the FAIR book, blogs, and other papers you find to help answer questions or supplement your understanding, but use the two standards’ PDFs as your main study aid.

Start memorizing - there are only three primary items that require rote memorization; everything else is common sense if you have a mastery of the materials. Those items are:

The Risk Management Stack

You need to know what they are, but more importantly, you need to know them in order.

Accurate models lead to meaningful measurements, which lead to effective comparisons - you get the idea. The test will have several questions like, “What enables well-informed decisions?” Answer: effective comparisons. I never did find a useful mnemonic that stuck like Please Don’t Throw Sausage Pizzas Away, but try to come up with something that works for you.

The FAIR Model

You are probably already familiar with the FAIR model and how it works by now, but you need to memorize it exactly as it appears on the ontology.

The FAIR model (source: FAIR Institute)

It’s not enough to know that Loss Event Frequency is derived from Threat Event Frequency and Vulnerability - you need to know that Threat Event Frequency is in the left box and Vulnerability is on the right. Once a day, draw out 13 blank boxes and fill them in. The test will ask you to match various FAIR elements of risk on an empty ontology. You also need to know if each element is a percentage or a number. This should be easier to memorize if you have a true understanding of the definitions.
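It can help to see why the "percentage or number" distinction matters. In the sketch below, Threat Event Frequency is a number (events per year) and Vulnerability is a percentage (the probability that a threat event becomes a loss event), so their product is again a frequency. Multiplying single point estimates is a simplification I'm using for illustration; the input values are hypothetical, and real analyses typically work with ranges or distributions.

```python
def loss_event_frequency(threat_event_frequency, vulnerability):
    """LEF (loss events per year) from TEF (threat events per year)
    and Vulnerability (a probability between 0 and 1)."""
    if not 0.0 <= vulnerability <= 1.0:
        raise ValueError("vulnerability is a probability and must be between 0 and 1")
    return threat_event_frequency * vulnerability


tef = 12.0   # hypothetical: 12 threat events per year (a number)
vuln = 0.25  # hypothetical: 25% of threat events become loss events (a percentage)

lef = loss_event_frequency(tef, vuln)
print(f"Loss Event Frequency: {lef} loss events per year")
```

If you can keep straight which boxes hold frequencies and which hold probabilities, the unit of each parent box follows from its children.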

Forms of Loss

Last, you need to know the six forms of loss. You don’t need to memorize the order, but you definitely need to recognize these as the six forms of loss and have a firm understanding of the definitions.

Productivity Loss

Response Loss

Replacement Loss

Fines and Judgements

Competitive Advantage

Reputation Damage

Quiz Yourself

I really recommend paying the $29.95 for the OpenFAIR Foundation Study Guide PDF. It has material review, questions/answers at the end of each chapter, and several full practice tests. The practice tests are so similar (even the same, for many questions) to the real test, that if you ace the practice tests, you’re ready. Also, check out FAIR certification flashcards for help in understanding the core concepts.

When you think you’re ready, register for your exam for a couple of weeks out. This gives you time to keep taking practice tests and memorizing terms.

In Closing…

It’s not a terribly difficult test, but you truly need a mastery of the FAIR risk concepts to pass. I think if you have a solid foundation in risk analysis in general, it takes a few weeks to study, as opposed to months for the CRISC or CISSP.

Good luck with your FAIR journey! As always, feel free to reach out to me or ask questions in the comments below.

No, COVID-19 is not a Black Swan event*

COVID-19 isn’t a Black Swan—it was predicted, modeled, and even planned for. So why are so many leaders acting like turkeys on Thanksgiving?

*Unless you’re a turkey

It’s really a White Ostrich event

There’s a special kind of history re-writing going on right now among some financial analysts, risk managers, C-level leadership, politicians and anyone else responsible for forecasting and preparing for major business, societal and economic disruptions. We’re about 3 months into the COVID-19 outbreak and people are starting to declare this a “Black Swan” event. Not only is “Black Swan” a generally bad and misused metaphor, the current pandemic also doesn’t fit the definition. I think it’s a case of CYA.

Just a few of many examples:

Marketwatch quoted an advisory firm founder stating the stock market fall is a “black swan market drop.”

The Nation predicted on February 25, 2020 that “The Coronavirus Could Be the Black Swan of 2020.”

Forbes declared on March 19, 2020: COVID-19 is a Black Swan.

My LinkedIn and Twitter feeds are filled with Black Swan declarations or predictions that the coming economic ramifications will be Black Swan events.

None of this is a Black Swan event. COVID-19, medical supply shortages, economic disaster – none of it.

Breaking Black Swans down

The term “Black Swan” became part of the business lexicon in 2007 with Nassim Taleb’s book titled The Black Swan: The Impact of the Highly Improbable. In it, Taleb describes a special kind of extreme, outlier event that comes as a complete surprise to the observer. The observer is so caught off-guard that rationalization starts to occur: they should have seen it all along.

According to Taleb, a Black Swan event has these three attributes:

“First, it is an outlier, as it lies outside the realm of regular expectations, because nothing in the past can convincingly point to its possibility. Second, it carries an extreme ‘impact’. Third, in spite of its outlier status, human nature makes us concoct explanations for its occurrence after the fact, making it explainable and predictable.”

Let’s take the Black Swan definition and fit it to everything that’s going on now.

“First, it is an outlier, as it lies outside the realm of regular expectations, because nothing in the past can convincingly point to its possibility.”

COVID-19 and all of the fallout, deaths, the looming humanitarian crisis, economic disaster and everything in-between is the opposite of what Taleb described. In risk analysis, we use past incidents to help inform forecasting of future events. We know a lot about past pandemics, how they happen and what occurs when they do. We’ve had warnings and analysis that the world is unprepared for a global pandemic. What is looming should also be of no surprise: past pandemics often significantly alter economies. A 2019 pandemic loss assessment by the World Health Organization (WHO) feels very familiar, as do many recent threat assessments showing that this was expected in the near-term future. Most medium and large companies have pandemic planning and response as part of their business continuity programs. In other words, past is prologue. Everything in the past convincingly points to the possibility of a global pandemic.

Perhaps the details of COVID-19’s origins may be a surprise to some, but the relevant information needed for risk managers, business leaders and politicians to become resilient and prepare for these events should be of absolutely no surprise. It’s true that the “when” is not knowable, but that’s the purpose of risk analysis. We don’t ignore high impact, low probability events.

“Second, it carries an extreme ‘impact’.”

This might be the only aspect of what we’re currently experiencing that fits the Black Swan definition, but extreme impact alone does not make the COVID-19 pandemic a Black Swan. The COVID-19 impact today is self-evident, and what’s to come is foreseeable.

“Third, in spite of its outlier status, human nature makes us concoct explanations for its occurrence after the fact, making it explainable and predictable.”

When a true Black Swan occurs, according to Taleb, observers start rationalizing: oh, we should have predicted it, signs were there all along, etc. Think about what this means – before the Black Swan event it’s unfathomable; after, it seems completely reasonable.

We are seeing the exact opposite now. The select folks who are outright calling this a Black Swan aren’t rationalizing that it should have or could have been predicted; they are now saying it was completely unpredictable. From POTUS saying the pandemic “snuck up on us,” to slow response from business, there’s some revisionist thinking going on.

I’m not sure why people are calling this a Black Swan. I suspect it’s a combination of misunderstanding what a Black Swan is, politicians playing CYA and fund managers trying to explain to their customers why their portfolios have lost so much value.

It’s a Black Swan to turkeys

“Uncertainty is a feature of the universe. Risk is in the eye of the beholder.”

-Sam Savage

Taleb explains in his book that Black Swans are observer-dependent. To illustrate the point, he tells the story of the Thanksgiving turkey.

“Consider a turkey that is fed every day. Every single feeding will firm up the bird's belief that it is the general rule of life to be fed every day by friendly members of the human race 'looking out for its best interests,' as a politician would say. On the afternoon of the Wednesday before Thanksgiving, something unexpected will happen to the turkey. It will incur a revision of belief.”

For the turkey, Thanksgiving is a Black Swan event. For the cook, it certainly is not. It’s possible that some politicians, risk managers and business executives truly are turkeys in this global pandemic. However, I don’t think there are many. I think most happen to be a different kind of bird.

If the COVID-19 pandemic isn’t a Black Swan…

If the COVID-19 pandemic isn’t a Black Swan event, what is it? My friend and fellow risk analyst Jack Whitsitt coined the phrase White Ostrich and had this to say:

I like Taleb’s book. It’s a fascinating read on risk and risk philosophy, but the whole Black Swan metaphor is misused, overused and doesn’t make much sense outside the parameters that he sets. I’ve written about the bad metaphor problem in the context of cyber risk. I also recommend reading Russell Thomas’s blog post on different colored swans. It will illuminate the issues and problems we face today.

Book Review | The Failure of Risk Management: Why It's Broken and How to Fix It, 2nd Edition

Doug Hubbard’s The Failure of Risk Management ruffled feathers in 2012—and the second edition lands just as hard, now with more tools, stories, and real-world tactics. If you’ve ever been frustrated by heat maps, this book is your upgrade path to real, defensible risk analysis.

When the first edition of The Failure of Risk Management: Why It's Broken and How to Fix It by Douglas Hubbard came out in 2012, it made a lot of people uncomfortable. Hubbard laid out well-researched arguments that some of businesses’ most popular methods of measuring risk have failed, and in many cases, are worse than doing nothing. Some of these methods include the risk matrix, heat map, ordinal scales, and other methods that fit into the qualitative risk category. Readers of the 1st edition will know that the fix is, of course, methods based on mathematical models, simulations, data, and evidence collection. The 2nd edition, released in March 2020, builds on the work of the previous edition but brings it into 2020 with more contemporary examples of the failure of qualitative methods and tangible advice on how to incorporate quantitative methods into readers’ risk programs. If you considered the 1st edition required reading, as many people do (including myself), the 2nd edition is a worthy addition to your bookshelf because of the extra content.

The closest I’ll get to an unboxing video

The book that (almost) started it all

I don’t think it would be fair to Jacob Bernoulli’s 1713 book Ars Conjectandi to say that the first edition of The Failure of Risk Management started it all, but Hubbard’s book certainly brought concepts such as probability theory into the modern business setting. Quantitative methodologies have been around for hundreds of years, but in the 1980s and ’90s people started to look for shortcuts around the math, evidence gathering, and critical thinking. Companies started using qualitative models (e.g., red/yellow/green, high/medium/low, heat maps) and these, unfortunately, became the de facto language of risk in most business analysis. Hubbard noticed this and carefully laid out an argument on why these methods are flawed and gave readers tangible examples of how to re-integrate quantitative methodologies into decision and risk analysis.

Hubbard eloquently reminds readers in Part Two of his new book all the reasons why qualitative methodologies have failed us. Most readers should be familiar with the arguments at this point and will find the “How to Fix It” portion of the book, Part Three, a much more interesting and compelling read. We can tell people all day how they’re using broken models, but if we don’t offer an alternative they can use, I fear arguments will fall on deaf ears. I can't tell you how many times I've seen a LinkedIn risk argument (yes, we have those) end with, “Well, you should have learned that in Statistics 101.” We’ll never change the world this way.

Hubbard avoids the dogmatic elements of these arguments and gives all readers actionable ways to integrate data-based decision making into risk programs. Some of the topics he covers include calibration, sampling methods for gathering data, an introduction to Monte Carlo simulations, and integrating better risk analysis methods into a broader risk management program. What's most remarkable isn't what he covers, but how he covers it. It’s accessible, (mostly) mathless, uses common terminology, and is loaded with stories and anecdotes. Most importantly, the reader can run quantitative risk analysis with Monte Carlo simulations from the comfort of their own computer with nothing more than Excel. I know that Hubbard has received criticism for using Excel instead of more typical data analysis software, such as Python or R, but I see this as a positive. With over 1.2 billion installs of Excel worldwide, readers can get started today instead of learning how to code and struggling with installing new software and packages. Anyone with motivation and a computer can perform quantitative risk analysis.

What’s New?

There are about 100 new pages in the second edition, with most being new content, but some readers will recognize concepts from Hubbard’s newer books, like the 2nd edition of How to Measure Anything and How to Measure Anything in Cybersecurity Risk. Some of the updated content includes:

An enhanced introduction that includes commentary on many of the failures of risk management that have occurred since the 1st edition was published, such as increased cyber-attacks and the Deepwater Horizon oil spill.

I was delighted to see much more content around how to get started in quantitative modeling in Part 1. Readers only need a desire to learn, and not a ton of risk or math experience to get started immediately.

Much more information is provided on calibration and how to reduce cognitive biases, such as the overconfidence effect.

Hubbard beefed up many sections with stories and examples, helping the reader connect even the most esoteric risk and math concepts to the real world.

Are things getting better?

It’s easy to think that things haven’t changed much. After all, most companies, frameworks, standards, and auditors still use qualitative methodologies and models. However, going back and leafing through the 1st edition and comparing it with the 2nd edition made me realize there has been significant movement in the last eight years. I work primarily in the cyber risk field, so I'm only going to speak to that subject, but the growing popularity of Factor Analysis of Information Risk (FAIR) – a quantitative cyber risk model – is proof that we are moving away from qualitative methods, albeit slowly. There are also two national conferences, FAIRcon and SIRAcon, that are dedicated to advancing quantitative cyber risk practices – neither of which existed in 2012.

I'm happy that I picked up the second edition. The new content and commentary are certainly worth the money. If you haven’t read either edition and want to break into the risk field, I would add this to your required reading list and make sure you get the newer edition. The book truly changed the field for the better in 2012, and the latest edition paves the way for the next generation of data-driven risk analysts.

You can buy the book here.

Exploit Prediction Scoring System (EPSS): Good news for risk analysts

Security teams have long relied on CVSS to rank vulnerabilities—but it was never meant to measure risk. EPSS changes the game by forecasting the likelihood of exploitation, giving risk analysts the probability input we’ve been missing.

I'm excited about Exploit Prediction Scoring System (EPSS)! Most Information Security and IT professionals will tell you that one of their top pain points is vulnerability management. Keeping systems updated feels like a hamster wheel of work: update after update, yet always behind. It’s simply not possible to update all the systems all the time, so prioritization is needed. Common Vulnerability Scoring System (CVSS) provides a way to rank vulnerabilities, but at least from the risk analyst perspective, something more is needed. EPSS is what we’ve been looking for.

Hi CVSS. It’s not you, it’s me

Introduced in 2005 and revised as version 2 in 2007, CVSS was the first mainstream model to tackle the vulnerability ranking problem with an open, easy-to-use way of scoring vulnerabilities. Security, risk, and IT people could then use the scores as a starting point to understand how vulnerabilities compare with each other, and by extension, prioritize system management.

CVSS takes a weighted scorecard approach. It combines base metrics (access vector, attack complexity, and authentication) with impact metrics (confidentiality, integrity, availability). Each factor is weighted and added together, resulting in a combined score of 0 through 10, with 10 being the most critical and needing urgent attention.

CVSS scores and rating
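
To make the scorecard idea concrete, here is a minimal sketch of the CVSS v2 base score calculation. The weights and the equation are written from memory of the published v2 specification, so treat them as an assumption and check them against the official document before relying on them. The function and dictionary names are hypothetical.

```python
# Sketch of the CVSS v2 base equation.
# ASSUMPTION: the weights below are recalled from the v2 specification.
ACCESS_VECTOR = {"L": 0.395, "A": 0.646, "N": 1.0}       # local, adjacent, network
ACCESS_COMPLEXITY = {"H": 0.35, "M": 0.61, "L": 0.71}    # high, medium, low
AUTHENTICATION = {"M": 0.45, "S": 0.56, "N": 0.704}      # multiple, single, none
CIA_IMPACT = {"N": 0.0, "P": 0.275, "C": 0.660}          # none, partial, complete


def cvss2_base_score(av, ac, au, c, i, a):
    """Return the CVSS v2 base score (0.0 to 10.0) for one vulnerability."""
    impact = 10.41 * (
        1 - (1 - CIA_IMPACT[c]) * (1 - CIA_IMPACT[i]) * (1 - CIA_IMPACT[a])
    )
    exploitability = 20 * ACCESS_VECTOR[av] * ACCESS_COMPLEXITY[ac] * AUTHENTICATION[au]
    # The spec zeroes the score when there is no impact at all.
    f_impact = 0.0 if impact == 0 else 1.176
    return round((0.6 * impact + 0.4 * exploitability - 1.5) * f_impact, 1)


if __name__ == "__main__":
    # Remote, no authentication, complete loss of C, I and A.
    print(cvss2_base_score("N", "L", "N", "C", "C", "C"))
    # Same access, but only partial loss of C, I and A.
    print(cvss2_base_score("N", "L", "N", "P", "P", "P"))
```

Notice that the result is a position on a 0 to 10 scale. Nothing in the inputs or the output is a probability or a loss amount.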

So, what’s the problem? Why do we want to break up with CVSS? Put simply, it’s a little bit of you, CVSS – but it’s mostly me (us). CVSS has a few problems: there are better models than a weighted scorecard ranked on an ordinal scale, and exploit complexity has seriously outgrown the base/impact metrics approach. Despite the problems, it’s a model that has served us well over the years. The problem lies with us; the way we use it, the way we've shoehorned CVSS into our security programs way beyond what it was ever intended to be. We’ve abused CVSS.

We use it as a de facto vulnerability risk ranking system. Keep in mind that risk, which is generally defined as an adverse event that negatively affects objectives, is made up of two components: the probability of a bad thing happening, and the impact to your objectives if it does. Now go back up and read what the base and impact metrics are: it’s not risk. Yes, they can be factors that comprise portions of risk, but a CVSS score is not risk on its own.

CVSS was never meant to communicate risk.

The newly released v3.1 adds more metrics on the exploitability of vulnerabilities, which is a step in the right direction. But, what if we were able to forecast future exploitability?

Why I like EPSS

If we want to change the way things are done, we can browbeat people with complaints about CVSS and tell them it’s broken, or we can make it easy for people to use a better model. EPSS does just that. I first heard about EPSS after Blackhat 2019 when Michael Roytman and Jay Jacobs gave a talk and released an accompanying paper describing the problem space and how their model solves many issues facing the field. In the time since, an online EPSS calculator has been released. After reading the paper and using the calculator on several real-world risk analyses, I’ve come to the conclusion that EPSS is easier and much more effective than using CVSS to prioritize remediation efforts based on risk. Some of my main takeaways on EPSS are:

True forecasting methodology: The EPSS calculation returns a probability of exploit in the next 12 months. This is meaningful, unambiguous – and most importantly – information we can take action on.

A move away from the weighted scorecard model. Five inputs into a weighted scorecard is not adequate to understand the full scope of harm a vulnerability can (or can’t) cause, considering system and exploit complexities.

Improved measurement: The creators behind EPSS created a model that inspects the attributes of a current vulnerability and compares it with the attributes of vulnerabilities in the past and whether or not they've been successfully exploited. This is the best indicator we have that will tell us whether or not something is likely to be exploitable in the future. This will result in (hopefully) better vulnerability prioritization. This is an evolution from CVSS, which measures attributes that may not be correlated to a vulnerability’s chance of exploit.

Comparisons: When using an ordinal scale, you can only make comparisons between items on that scale. By using probabilities, EPSS allows the analyst to compare anything: a system update, another risk that has been identified outside of CVSS, etc.

EPSS output (source: https://www.kennaresearch.com/tools/epss-calculator/)

In a risk analysis, EPSS significantly improves assessing the probability side of the equation. In some scenarios, a risk analyst can use this input directly, leaving only magnitude to work on. This makes risk assessments faster than working from CVSS. Using CVSS as an input to help determine the probability of successful exploit requires a bit of extra work. For example, I would check to see if a Metasploit package was available, combine that with past internal incident data and ask a few SMEs for adjustment. Admittedly crude and time-consuming, but it worked. I don't have to do this anymore.

There’s a caution to this, however. EPSS informs only the probability portion of a risk calculation. You still need to calculate magnitude by cataloging the data types on the system and determining the various ways your company could be impacted if the system was unavailable or the data disclosed.
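
As a rough illustration of how an exploit probability slots into the probability side of a risk calculation, the sketch below multiplies a probability by a magnitude estimate and ranks systems by the result. Every number and name is hypothetical: the probabilities stand in for EPSS output, and the dollar figures stand in for the magnitude analysis described above. A fuller analysis would use ranges rather than single point estimates.

```python
from dataclasses import dataclass


@dataclass
class VulnRisk:
    system: str
    exploit_probability: float  # hypothetical stand-in for an EPSS 12-month probability
    magnitude_usd: float        # hypothetical loss if the vulnerability is exploited

    @property
    def expected_loss_usd(self) -> float:
        # Probability times magnitude: the two components of risk.
        return self.exploit_probability * self.magnitude_usd


def rank_by_expected_loss(risks):
    """Sort highest expected loss first."""
    return sorted(risks, key=lambda r: r.expected_loss_usd, reverse=True)


# Made-up inputs for illustration only.
risks = [
    VulnRisk("public web server", 0.40, 250_000),
    VulnRisk("internal wiki", 0.60, 20_000),
    VulnRisk("payment database", 0.05, 4_000_000),
]

ranked = rank_by_expected_loss(risks)
for r in ranked:
    print(f"{r.system}: ${r.expected_loss_usd:,.0f}")
```

In this made-up data the system with the lowest exploit probability ranks first because its magnitude is so much larger, which is why probability alone is not the whole picture.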

Determining the probability of a future event is always a struggle, and EPSS significantly reduces the amount of work we have to do. I’m interested in hearing from other people in Information Security – is this significant for you as well? Does this supplement, or even replace, CVSS? If not, why?

Further Reading and Links:

Exploit Prediction Scoring System (EPSS) | BlackHat 2019 slides

Webinar: Predictive Vulnerability Scoring System | SIRA webinar series, behind member wall

EPSS Calculator from Kenna Research

San Francisco's poop statistics: Are we measuring the wrong thing?

Reports of feces in San Francisco have skyrocketed—but are we measuring actual incidents or just better reporting? This post breaks down the data, visualizations, and media narratives to ask whether we’re tracking the problem… or just the poop map.

In this post, I’m going to cover two kinds of shit. The first kind is feces on the streets of San Francisco that I’m sure everyone knows about due to abundant news coverage. The second kind is bullshit; specifically, the kind found in faulty data gathering, analysis, hypothesis testing, and reporting.

Since 2011, the SF Department of Public Works has tracked the number of reports and complaints about feces on public streets and sidewalks. The data is open and used to create graphs like the one shown below.

Source: Openthebooks.com

The graph displays the year-over-year number of citizen reports of human feces in the city. It certainly seems like it’s getting worse. In fact, the number of people defecating on the streets between 2011 and 2018 has increased by over 400%! This is confirmed by many news headlines reporting on the graph when it was first released. A few examples are:

Sure seems like a dismal outlook, almost a disaster fit for the Old Testament.

Or is it?

The data (number of reports of human feces) and the conclusion drawn from it (San Francisco is worse than ever) make my measurement spidey sense tingle. I have a few questions about both the data and the report.

Does the data control for the City’s rollout of the 311 mobile app, which allows people to make reports from their phone?

Has the number of people with mobile phones from 2011 to the present increased?

Do we think the City’s media efforts to familiarize people with 311, the vehicle for reporting poop, could contribute to the increase?

The media loves to report on the poop map and poop graph as proof of San Francisco’s decline. Would extensive media coverage contribute to citizen awareness that it can be reported, therefore resulting in an increase in reports?

Is it human poop? (I know the answer to this: not always. Animal poop and human poop reports are logged and tagged together in City databases.)

Does the data control for multiple reports of the same pile? 911 stats have this problem; 300 calls about a car accident don’t mean there were 300 car accidents.
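
The duplicate-report question is easy to sketch in code. The example below collapses reports that share a date and a location into a single incident. The records and the matching rule are made up for illustration and are not how the City's data is actually structured.

```python
from collections import Counter

# Hypothetical report log: several people reporting the same thing on the same day.
reports = [
    {"date": "2018-06-01", "location": "Market St & 6th St"},
    {"date": "2018-06-01", "location": "Market St & 6th St"},
    {"date": "2018-06-01", "location": "Market St & 6th St"},
    {"date": "2018-06-01", "location": "Turk St & Hyde St"},
    {"date": "2018-06-02", "location": "Market St & 6th St"},
]


def count_incidents(reports):
    """Treat reports with the same date and location as one incident.

    Returns the number of distinct incidents and the reports-per-incident tally.
    """
    per_incident = Counter((r["date"], r["location"]) for r in reports)
    return len(per_incident), per_incident


n_incidents, per_incident = count_incidents(reports)
print(f"{len(reports)} reports -> {n_incidents} distinct incidents")
```

Counting reports and counting incidents give different answers from the same log, which is the point of the question.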

Knowing that a measurement and subsequent analysis starts with a well-formed question, we have to ask: are we measuring the wrong thing here?

I think we are!

Perhaps a better question we can answer with this data is: what are the contributing factors that may show a rise in feces reports?

A more honest news headline might read something like this: Mobile app, outreach efforts lead to an increase in citizens reporting quality of life issues

Here’s another take on the same data:

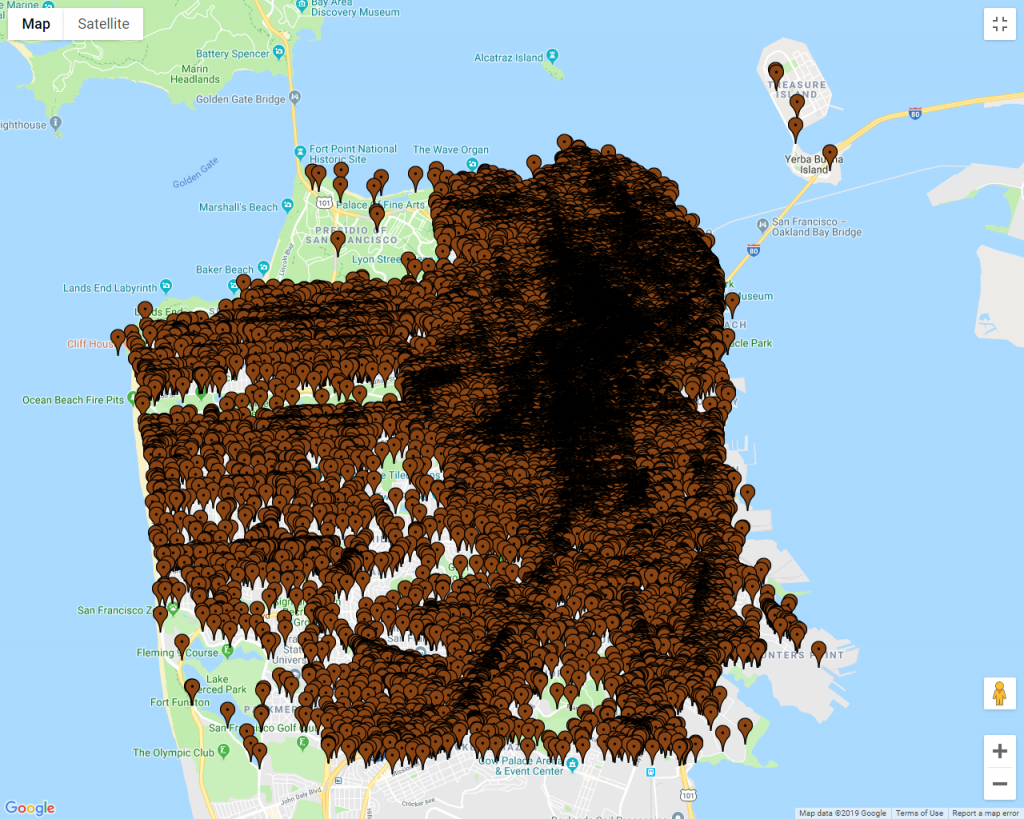

Locations of all poop reports from 2011 to 2018. Source: Openthebooks.com

At first glance, the reader would come to the conclusion that San Francisco is covered in poop - literally. The entire map is covered! The publishing of this map led to this cataclysmic headline from Fox News: San Francisco human feces map shows waste blanketing the California city.

Fox’s Tucker Carlson declared San Francisco is proof that “Civilization Itself Is Coming Apart” and often references the poop map as proof.

Let’s pull this map apart a little more. The map shows 8 years of reports on one map - all the years are displayed on top of each other. That’s a problem. It’s like creating a map of every person, living or dead, that’s ever lived in the city of London from 1500AD to present and saying, “Look at this map! London is overpopulated!” A time-lapse map would be much more appropriate in this case.

Here’s another problem with the map: a single pin represents one poop report. Look at the size of the pin and what it’s meant to represent in relation to the size of the map. Is it proportional? It is not! Edward Tufte, author of “The Visual Display of Quantitative Information” calls this the Lie Factor.

Upon defining the Lie Factor, the following principle is stated in his book:

The representation of numbers, as physically measured on the surface of the graphic itself, should be directly proportional to the quantities represented.

In other words, the pin is outsized. It’s way bigger than the turd it’s meant to represent, relative to the map. No wonder the map leads us to think that San Francisco is blanketed in poop.
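
A back-of-the-envelope calculation shows how outsized. The numbers below are assumptions for illustration only: a map image 600 pixels wide that spans roughly 7 miles of city, and a pin drawn 20 pixels wide.

```python
FEET_PER_MILE = 5280


def pin_ground_width_feet(map_width_miles, map_width_px, pin_width_px):
    """Ground distance covered by a pin of the given pixel width."""
    map_width_feet = map_width_miles * FEET_PER_MILE
    return map_width_feet * pin_width_px / map_width_px


# All three inputs are hypothetical; substitute the real map's dimensions.
width = pin_ground_width_feet(map_width_miles=7, map_width_px=600, pin_width_px=20)
print(f"One pin covers about {width:,.0f} feet of street")
```

Under those assumptions, a single pin spans more than a thousand feet of street to mark something that is perhaps a foot across.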

I’m not denying that homelessness is a huge problem in San Francisco. It is. However, these statistics and headlines are hardly ever used to improve the human condition or open a dialog about why our society pushes people to the margins. It’s almost always used to mock and poke fun at San Francisco.

There’s an Information Security analogy to this. Every time I see an unexpected, sharp increase in anything, whether it’s phishing attempts or lost laptops, I always ask this: What has changed in our detection, education, visibility, logging and reporting capabilities? It’s almost never a drastic change in the threat landscape and almost always a change in our ability to detect, recognize and report incidents.

My 2020 Cyber Predictions -- with Skin in the Game!

Most cybersecurity predictions are vague and unaccountable — but not these. I made 15 specific, measurable forecasts for 2020, added confidence levels, and pledged a donation to the EFF for every miss. Let’s see how it played out.

It’s the end of the year and that means two things: the year will be declared the “Year of the Data Breach” again (or equivalent hyperbolic headline) and <drumroll> Cyber Predictions! I react to yearly predictions with equal parts of groan and entertainment.

Some examples of 2020 predictions I’ve seen so far are:

Security awareness will continue to be a top priority.

Cloud will be seen as more of a threat.

Attackers will exploit AI.

5G deployments will expand the attack surface.

The US 2020 elections will see an uptick in AI-generated fake news.

They’re written so generically that they could hardly be considered predictions at all.

I should point out that these are interesting stories that I enjoy reading. I like seeing general trends and emerging threats from cybersecurity experts. However, when compared against forecasts and predictions that we’re accustomed to seeing, such as a 40% chance of rain or the Eagles’ odds are 10:1 to win, end of year predictions are vague, unclear and unverifiable.

They’re worded in such a way that the person offering up the prediction could never be considered wrong.

Another problem is that no one ever goes back to grade their prior predictions to see if they were accurate or not. What happened with all those 2019 predictions? How accurate were they? What about individual forecasters – which ones have a high level of accuracy, and therefore, deserve our undivided attention in the coming years? We don’t know!

I’ve decided to put my money where my big mouth is. I’m going to offer up 10 cyber predictions, with a few extra ones thrown in for fun. All predictions will be phrased in a clear and unambiguous manner. Additionally, they will be quantitatively and objectively measurable. Next year, anyone with access to Google will be able to independently grade my predictions.

Methodology

There are two parts to the prediction:

The Prediction: “The Giants will win Game 2 of the 2020 World Series.” The answer is happened/didn’t happen and is objectively knowable. At the end of 2020, I’ll tally up the ones I got right.

My confidence in my prediction. This ranges from 50% (I’m shaky; I might as well trust a coin flip) to 100% (a sure thing). The sum of all percentages is the number I expect to get right. People familiar with calibrated probability assessments will recognize this methodology.

The difference between the actual number correct and expected number correct is an indicator of my overconfidence or underconfidence in my predictions. For every tenth of a point that my expected number correct differs from my actual number correct, I’ll donate $10 to the Electronic Frontier Foundation. For example, if I get 13/15 right, and I expected to get 14.5 right, that’s a gap of 1.5, or 15 tenths, and a $150 donation.
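
The donation rule above is simple arithmetic, sketched below. The function name is hypothetical, and rounding the gap to the nearest tenth is an assumption about how partial tenths would be handled.

```python
def eff_donation(actual_correct, expected_correct, dollars_per_tenth=10):
    """Dollars owed for the gap between expected and actual correct predictions.

    ASSUMPTION: the gap is rounded to the nearest tenth of a point.
    """
    tenths_off = round(abs(expected_correct - actual_correct) * 10)
    return tenths_off * dollars_per_tenth


# The worked example from the text: 13 right when 14.5 were expected.
print(eff_donation(actual_correct=13, expected_correct=14.5))
```

The rule uses the absolute gap, so underconfidence (getting more right than expected) costs the same as overconfidence.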

My Predictions

Facebook will ban political ads in 2020, similar to Twitter’s 2019 ban.

Confidence: 50%

By December 31, 2020 none of the 12 Russian military intelligence officers indicted by a US federal grand jury for interference in the 2016 elections will be arrested.

Confidence: 90%

The Jabberzeus Subjects – the group behind the Zeus malware massive cyber fraud scheme – will remain at-large and on the FBI’s Cyber Most Wanted list by the close of 2020.

Confidence: 90%

The total number of reported US data breaches in 2020 will not be greater than the number of reported US data breaches in 2019. This will be measured by doing a Privacy Rights Clearinghouse data breach occurrence count.

Confidence: 70%

The total number of records exposed in reported data breaches in the US in 2020 will not exceed those in 2019. This will be measured by adding up records exposed in the Privacy Rights Clearinghouse data breach database. Only confirmed record counts will apply; breaches tagged as “unknown” record counts will be skipped.

Confidence: 80%

One or more companies in the Fortune Top 10 list will not experience a reported data breach by December 31, 2020.

Confidence: 80%

The 2020 Verizon Data Breach Investigations Report will report more breaches caused by state-sponsored or nation state-affiliated actors than in 2019. The percentage must exceed 23% - the 2019 number.

Confidence: 80%

By December 31, 2020 two or more news articles, blog posts or security vendors will declare 2020 the “Year of the Data Breach.”

Confidence: 90%

Congress will not pass a Federal data breach law by the end of 2020.

Confidence: 90%

By midnight on Wednesday, November 4th 2020 (the day after Election Day), the loser in the Presidential race will not have conceded to the victor specifically because of suspicions or allegations related to election hacking, electoral fraud, tampering, and/or vote-rigging.

Confidence: 60%

I’m throwing in some non-cyber predictions, just for fun. Same deal - I’ll donate $10 to the EFF for every tenth of a point that my expected number correct differs from my actual number correct.

Donald Trump will express skepticism about the Earth being round and/or come out in outright support of the Flat Earth movement. It must be directly from him (e.g. tweet, rally speech, hot mic) - cannot be hearsay.

Confidence: 60%

Donald Trump will win the 2020 election.

Confidence: 80%

I will submit a talk to RSA 2021 and it will be accepted. (I will know by November 2020).

Confidence: 50%

On or before March 31, 2020, Carrie Lam will not be Chief Executive of Hong Kong.

Confidence: 60%

By December 31, 2020 the National Bureau of Economic Research (NBER) will not have declared that the US is in recession.

Confidence: 70%
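
Summing the confidence levels listed above gives the expected number of correct predictions. The sketch below does that arithmetic. The percentages are copied from the two lists in this post, and the variable names are arbitrary.

```python
# Confidence levels in the order the predictions appear above, as whole percentages.
cyber_confidence_pct = [50, 90, 90, 70, 80, 80, 80, 90, 90, 60]
fun_confidence_pct = [60, 80, 50, 60, 70]


def expected_correct(confidence_pct):
    """Expected number of correct predictions: the sum of the probabilities."""
    return sum(confidence_pct) / 100


cyber_expected = expected_correct(cyber_confidence_pct)
fun_expected = expected_correct(fun_confidence_pct)

print(f"Cyber: expect {cyber_expected} of {len(cyber_confidence_pct)} correct")
print(f"Non-cyber: expect {fun_expected} of {len(fun_confidence_pct)} correct")
```

That works out to 7.8 of the 10 cyber predictions and 3.2 of the 5 non-cyber ones, which are the baselines the end-of-year tally gets compared against.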

OK, I have to admit, I’m a little nervous that I’m going to end up donating a ton of money to the EFF, but I have to accept it. Who wants to join me? Throw up some predictions, with skin in the game!

The Most Basic Thanksgiving Turkey Recipe -- with Metrics!

Cooking turkey is hard — and that’s why I love it. In this post, I break down the most basic Thanksgiving turkey recipe and share how I use metrics (yes, real KPIs) to measure success and improve year over year.

I love Thanksgiving. Most cultures have a day of gratitude or a harvest festival, and this is ours. I also love cooking. I’m moderately good at it, so when we host Thanksgiving, I tackle the turkey. It brings me great joy, not only because it tastes great, but because it’s hard. Anyone who knows me knows I love hard problems. Just cooking a turkey is easy, but cooking it right is hard.

I’ve gathered decades of empirical evidence on how to cook a turkey from my own attempts and from observing my mother and grandmother. I treat cooking turkey like a critical project, with risk factors, mitigants and - of course - metrics. Metrics are essential to me because I can measure the success of my current cooking effort and improve year over year.

Turkey Cooking Objectives

Let’s define what we want to achieve. A successful Thanksgiving turkey has the following attributes:

The bird is thoroughly cooked and does not have any undercooked areas.

The bird is not overcooked and dry, which is the opposite failure from a raw bird. It’s a careful balancing act between a raw and dry bird, with little margin for error.

Tastes good and is flavorful.

The bird is done cooking within a predictable timeframe (think side dishes: if your ETA is way off in either direction, you can end up with cold sides or a cold bird).

Tony’s Turkey Golden Rules

Brining is a personal choice. It’s controversial. Some people swear by a wet brine, a dry brine, or no brine. There’s no one right way - each has pros, cons, and different outcomes. Practice different methods on whole chickens throughout the year to find what works for you. I prefer a wet brine with salt, herbs, and spices.

Nothing (or very little) in the cavity. It’s tempting to fill the cavity up with stuffing, apples, onions, lemons and garlic. Doing so inhibits airflow and heat while cooking, significantly adding to total cooking time. Achieving a perfectly cooked turkey with a moist breast means you are cooking this thing as fast as possible.

No basting. Yes, basting helps keep the breast moist, but you’re also opening the oven many times, letting heat out - increasing the cooking time. I posit basting gives the cook diminishing returns and can have the unintended consequence of throwing the side dish timing out of whack.

The Most Basic Recipe

Required Tools

Turkey lacer kit (pins and string)

Roasting pan

Food thermometer (a real one, not the pop-up kind)

Ingredients

Turkey

Salt

Herb butter (this is just herbs, like thyme, mixed into butter. Make this in the morning)

Prep Work

If frozen, make sure the turkey is sufficiently thawed. The ratio is 24 hours in the refrigerator for every 5 pounds.

Preheat the oven to 325 degrees Fahrenheit.

Remove the turkey from the packaging or brine bag. Check the cavity and ensure it’s empty.

Rub salt on the whole turkey, including the cavity. Take it easy on this step if you brined.

Loosen the skin on the breast and shove herb butter between the skin and meat.

Melt some of your butter and brush it on.

Pin the wings under the bird and tie the legs together.

Determine your cooking time. It’s about 13-15 minutes per pound (at 325F) per the USDA.

Optional: You can put rosemary, thyme, sage, lemons or apples into the cavity, but take it easy. Just a little bit - you don’t want to inhibit airflow.

Optional: Calibrate your oven and your kitchen thermometer for a more accurate cooking time range.
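The thawing and cooking arithmetic above is easy to sketch in a few lines of Python. This is only a rough illustration: the function names and the 14-pound example bird are my own assumptions, and the ratios are the ones quoted in the steps above (24 hours of refrigerator thawing per 5 pounds, 13-15 minutes per pound at 325F).

```python
import math

def thaw_days(weight_lb, lb_per_day=5.0):
    """Whole days of refrigerator thawing, at 24 hours per 5 pounds."""
    return math.ceil(weight_lb / lb_per_day)

def cooking_time_range(weight_lb, minutes_per_lb=(13, 15)):
    """Projected cooking time at 325F as a (low, high) range in minutes."""
    low, high = minutes_per_lb
    return weight_lb * low, weight_lb * high

def format_minutes(minutes):
    """Render a number of minutes as hours and minutes."""
    hours, mins = divmod(round(minutes), 60)
    return f"{hours}h {mins:02d}m"

weight = 14  # pounds; a hypothetical bird
low, high = cooking_time_range(weight)
print(f"Thaw for about {thaw_days(weight)} days")
print(f"Projected cooking time: {format_minutes(low)} to {format_minutes(high)}")
```

Treat the output as a forecast range, not a promise. The thermometer reading still decides when the bird comes out.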

Cooking

Put the turkey in the oven.

About halfway through the cooking time, cover the turkey breast with aluminum foil. This is the only time you will open the oven, other than taking temperature readings. This can be mitigated somewhat with the use of a digital remote thermometer.

About 10-15 minutes before the cooking time is up, take a temperature reading. I take two; the innermost part of the thigh and the thickest part of the breast. Watch this video for help.

Take the turkey out when the temperature reads 165 degrees F. Let it rest for 15-20 minutes.

Carving is a special skill. Here’s great guidance.

Metrics

Metrics are my favorite part. How do we know we met our objectives? Put another way - what would we directly observe that would tell us Thanksgiving was successful?

Here are some starting metrics:

Cooking time within the projected range: We want everything to be served warm or hot, so the turkey should be ready within +/- 15 minutes of the projected total cooking time. Anything more, in either direction, is a risk factor. Think of the projected cooking time as your forecast. Was your forecast accurate? Were you under- or overconfident?

Raw: This is a binary metric; it either is, or it isn’t. If you cut into the turkey and there are pink areas, something went wrong. Your thermometer is broken, needs calibration, or you took the temperature wrong.

Is the turkey moist, flavorful, and enjoyable to eat? This is a bit harder because it’s an intangible. We know that intangibles can be measured, so let’s give it a shot. Imagine two families sitting down for Thanksgiving dinner: Family #1 has a dry, gross, overcooked turkey. Family #2 has a moist, perfectly cooked turkey. What differences are we observing between families?

% of people that take a second helping. This has to be a range because some people will always get seconds, and others will never, regardless of how dry or moist it is. In my family, everyone is polite and won’t tell me it’s dry during the meal, but if the percentage of second helpings is less than prior observations (generally, equal to or less than 20%), there’s a problem. There’s my first KPI (key performance indicator).

% of people that use too much gravy. This also has to be a range because some people drink gravy like it’s water, and others hate it. Gravy makes dry, tasteless turkey taste better. I know my extended family very well, and if the percentage of people overusing gravy exceeds 40%, it’s too dry. Keep in mind that “too much gravy” is subjective and should be rooted in prior observations.

% of kids that won’t eat the food. Children under the age of 10 lack the manners and courteousness of their adult counterparts. It’s a general fact that most kids like poultry (McNuggets, chicken strips, chicken cheesy rice) and a good turkey should, at the very least, get picked at, if not devoured by a child 10 or under. If 50% or more of kids in my house won’t take a second bite, something is wrong.

% of leftover turkey that gets turned into soup, or thrown out. Good turkey doesn’t last long. Bad turkey gets turned into soup or thrown out after a few days in the refrigerator. In my house, if 60% or more of leftovers don’t get directly eaten within four days, it wasn’t that good.

Bonus: Predictive Key Risk Indicator. In late October, if 50% or more of your household is lobbying for you to “take it easy this year” and “just get Chinese takeout,” your Thanksgiving plan is at risk. In metrics and forecasting, past is prologue. Last year’s turkey didn’t turn out well!

Adjust all of the above thresholds to control for your own familial peculiarities: picky eaters, never/always eat leftovers (regardless of factors), a bias for Chinese takeout, etc.
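For the spreadsheet-averse, here is a minimal sketch of how these KPIs could be checked against their thresholds. The thresholds are the ones from my house, described above. The dictionary keys, the function name, and the observed values are hypothetical, so swap in your own.

```python
# Thresholds from the metrics above; the second field says which side is bad.
THRESHOLDS = {
    "second_helpings": (0.20, "at_or_below"),      # 20% or less is a problem
    "too_much_gravy": (0.40, "above"),             # more than 40% means too dry
    "kids_refusing": (0.50, "at_or_above"),        # 50% or more won't take a second bite
    "leftovers_not_eaten": (0.60, "at_or_above"),  # 60% or more souped or tossed
}

def flag_kpis(observed):
    """Return the names of the KPIs that breach their threshold."""
    flags = []
    for name, (limit, direction) in THRESHOLDS.items():
        value = observed[name]
        if direction == "at_or_below":
            breached = value <= limit
        elif direction == "above":
            breached = value > limit
        else:  # "at_or_above"
            breached = value >= limit
        if breached:
            flags.append(name)
    return flags

# Hypothetical observations from one Thanksgiving dinner
observed = {
    "second_helpings": 0.15,
    "too_much_gravy": 0.30,
    "kids_refusing": 0.50,
    "leftovers_not_eaten": 0.25,
}
print(flag_kpis(observed))  # ['second_helpings', 'kids_refusing']
```

Keeping the thresholds in one place makes it easy to adjust them for your own family's peculiarities.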

With these tips, you are more likely to enjoy a delicious and low-risk holiday. Happy Thanksgiving!

Improve Your Estimations with the Equivalent Bet Test

Overconfident estimates can wreck a risk analysis. The Equivalent Bet Test is a simple thought experiment—borrowed from decision science and honed by bookies—that helps experts give better, more calibrated ranges by putting their assumptions to the test.

“The illusion that we understand the past fosters overconfidence in our ability to predict the future.”

― Daniel Kahneman, Thinking, Fast and Slow

A friend recently asked me to teach him the basics of estimating values for use in a risk analysis. I described the fundamentals in a previous blog post, covering Doug Hubbard’s Measurement Challenge, but to quickly recap: estimates are best provided in the form of ranges to articulate uncertainty about the measurement. Think of the range as wrapping an estimate in error bars. An essential second step is asking the estimator their confidence that the true value falls into their range, also known as a confidence interval.

Back to my friend: after a quick primer, I asked him to estimate the length of a Chevy Suburban, with a 90% confidence interval. If the true length, which is easily Googleable, is within his range, I’d buy him lunch. He grinned at me and said, “Ok, Tony – the length of a Chevy Suburban is between 1 foot and 50 feet. Now buy me a burrito.” Besides the obvious error I made in choosing the wrong incentives, I didn't believe the estimate he gave me reflected his best estimate. A 90% confidence interval, in this context, means the estimator is wrong 10% of the time, in the long run. His confidence interval is more like 99.99999%. With a range as impossibly absurd as that, he is virtually never wrong.

I challenged him to give a better estimate – one that truly reflected a 90% confidence interval, but with a free burrito in the balance, he wasn’t budging.

If only there were a way for me to test his estimate. Is there a way to ensure the estimator isn’t providing impossibly large ranges to ensure they are always right? Conversely, can I also test for ranges that are too narrow? Enter the Equivalent Bet Test.

The Equivalent Bet Test

Readers of Hubbard’s How to Measure Anything series or Jones and Freund’s Measuring and Managing Risk: A FAIR Approach are familiar with the Equivalent Bet Test. The Equivalent Bet Test is a mental aid that helps experts give better estimates in a variety of applications, including risk analysis. It’s just one of several tools in a risk analyst’s toolbox to ensure subject matter experts are controlling for the overconfidence effect. Being overconfident when giving estimates means one’s estimates are wrong more often than one thinks they are. The inverse is also observed, but is not as common: underconfidence means one’s estimates are right more often than one thinks they are. Controlling for these effects, or cognitive biases, is called calibration. An estimator is calibrated when they routinely give estimates with a 90% confidence interval, and in the long run, they are correct 90% of the time.
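To make the definition of calibration concrete, here is a small sketch that scores a set of 90% confidence interval estimates against the true values. The function name and the sample data are made up for illustration, and a real calibration check needs far more than five questions.

```python
def hit_rate(intervals, true_values):
    """Fraction of (low, high) ranges that contain the corresponding true value."""
    hits = sum(
        low <= truth <= high
        for (low, high), truth in zip(intervals, true_values)
    )
    return hits / len(true_values)

# Hypothetical 90% confidence interval estimates and the true answers
intervals = [(10, 20), (100, 200), (1, 5), (50, 60), (0, 3)]
true_values = [15, 250, 4, 55, 7]

rate = hit_rate(intervals, true_values)
print(rate)  # 3 of 5 ranges contain the truth: 0.6
```

A hit rate well below 0.90 over many estimates suggests overconfidence (ranges too narrow). A hit rate well above 0.90 suggests underconfidence (ranges too wide).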

Under- and overconfident experts can significantly impact the accuracy of a risk analysis. Therefore, risk analysts must use elicitation aids such as calibration quizzes, constant feedback on the accuracy of prior estimates, and equivalent bets, all of which get the estimator closer to calibration.

The technique was developed by decision science pioneers Carl Spetzler and Carl-Axel Staël von Holstein and introduced in their seminal 1975 paper Probability Encoding in Decision Analysis. Spetzler and Staël von Holstein called this technique the Probability Wheel. The Probability Wheel, along with the Interval Technique and the Equivalent Urn Test, is one of several methods of validating probability estimates from experts described in their paper.

Doug Hubbard re-introduced the technique in his 2007 book How to Measure Anything as the Equivalent Bet Test. It is one of the easiest-to-use tools a risk analyst has to test for under- and overconfidence in their experts. It’s best used as a teaching aid and requires a little bit of setup, but it serves as an invaluable exercise to get estimators closer to their stated confidence interval. After estimators learn this game and why it is so effective, they can play it in their head when giving an estimate.

Figure 1: The Equivalent Bet Test Game Wheel

How to Play

First, set up the game by placing down house money. The exact denomination doesn’t matter, as long as it’s enough money that someone would care about winning or losing it. For this example, we are going to play with $20. The facilitator also needs a specially constructed game wheel, seen in Figure 1. The game wheel is the exact opposite of what one would see on The Price is Right: there’s a 90% chance of winning, and only a 10% chance of losing. I made an Equivalent Bet Test game wheel – and it spins! It’s freely available for download here.

Here are the game mechanics:

The estimator places $20 down to play the game; the house also places down $20.

The facilitator asks the estimator to provide an estimate in the form of a range of numbers, with a 90% confidence interval (the estimator is 90% confident that the true number falls somewhere in the range).

Now, the facilitator presents a twist! Which game would you like to play?

Game 1: Stick with the estimate. If the true answer falls within the range provided, you win the house’s $20.

Game 2: Spin the wheel. 90% of the wheel is colored blue. Land in blue, win $20.

Present a third option: Ambivalence; the estimator recognizes that both games have an equal chance of winning $20; therefore, there is no preference.

Which game the estimator chooses reveals much about how confident they are about the given estimate. The idea behind the equivalent bet test is to test whether or not one is truly 90% confident about the estimation.

If Game 1 is chosen, the estimator believes the estimate has a higher chance of winning. This means the estimator is more than 90% confident; the range is too wide.

If Game 2 is chosen, the estimator believes the wheel has a greater chance of winning – the estimator is less than 90% confident. This means the range is too tight.

The perfect balance would be that the estimator doesn’t care which game they play. Each has an equal chance of winning in the estimator’s mind; therefore, both games have a 90% chance of winning.
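The logic behind the three outcomes can be sketched as a simple comparison between the wheel’s 90% and the probability the estimator privately assigns to their own range. The function names and the `believed_p` input are assumptions for illustration. In a real session, the facilitator only sees the choice of game, not the number behind it.

```python
import math

WHEEL_WIN_PROBABILITY = 0.90
STAKE = 20  # dollars put down by both the estimator and the house

def expected_winnings(p_win, stake=STAKE):
    """Expected net result: win the house's stake with p_win, otherwise lose your own."""
    return p_win * stake - (1 - p_win) * stake

def equivalent_bet(believed_p):
    """Which game a rational estimator prefers, and what that says about the range."""
    if math.isclose(believed_p, WHEEL_WIN_PROBABILITY, abs_tol=1e-9):
        return "indifferent", "range matches a 90% confidence interval"
    if believed_p > WHEEL_WIN_PROBABILITY:
        return "Game 1 (estimate)", "range is too wide; narrow it"
    return "Game 2 (wheel)", "range is too tight; widen it"

for p in (0.99, 0.90, 0.70):
    game, meaning = equivalent_bet(p)
    print(f"believes {p:.0%}: {game}, {meaning}")
```

Because both games pay the same $20, the preferred game is simply the one with the higher chance of winning, which is why the choice reveals the estimator’s real confidence.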

Why it Works

Insight into why this game helps the estimator achieve calibration can be had by looking at the world of bookmakers. Bookmakers are people who set odds and take bets on sporting and other events as a profession. Recall that calibration, in this context, is a measurement of the validity of one’s probability assessment. For example: if an expert gives estimates on the probability of different types of cyber-attacks occurring with a 90% confidence interval, that individual would be considered calibrated if, in the long run, 90% of the forecasts are accurate. (For a great overview of calibration, see the paper Calibration of Probabilities: The State of the Art to 1980, written by Lichtenstein, Fischhoff and Phillips.) Study after study shows that humans are not good estimators of probabilities, and most are overconfident in their estimates. (See footnotes at the end for a partial list.)

When bookmakers make a bad forecast, they lose something – money. Sometimes, they lose a lot of money. If they make enough bad forecasts, in the long run, they are out of business, or even worse. This is the secret sauce – bookmakers receive constant, consistent feedback on the quality of their prior forecasts and have a built-in incentive, money, to improve continually. Bookmakers wait a few days to learn the outcome of a horserace and adjust accordingly. Cyber risk managers are missing this feedback loop – data breach and other incident forecasts are years or decades in the future. Compounding the problem, horserace forecasts are binary: win or lose, within a fixed timeframe. Cyber risk forecasts are not. The timeline is not fixed; “winning” and “losing” are shades of grey and dependent on other influencing factors, like detection capabilities.

It turns out we can simulate the losses a bookmaker experiences with games like the Equivalent Bet Test, urn problems and general trivia questions designed to gauge calibration. These games trigger loss aversion in our minds and, with feedback and consistent practice, our probability estimates will improve. When we go back to real life and make cyber forecasts, those skills carry forward.

Integrating the Equivalent Bet Test into your Risk Program

I’ve found that it’s most effective to present the Equivalent Bet Test as a training aid when teaching people the basics of estimation. I explain the game, rules and the outcomes: placing money down to play, asking for an estimate, offering a choice between games, spinning a real wheel and the big finale – what the estimator’s choice of game reveals about their cognitive biases.

Estimators need to ask themselves this simple question each time they make an estimate: “If my own money were at stake, which bet would I take: my estimate, which has a 90% chance of being right, or a spin on a wheel with a 90% chance of winning?” The estimator should think critically about each choice, then adjust the range on the estimate until truly ambivalent between the two. At this point, in the estimator’s mind, both games have an equal chance of winning or losing.